Many believe chatbot experiences still feel far too rigid, robotic, and predefined.

Thankfully, generative AI (GenAI) embedded into conversational AI platforms may soon start to shift this tired yet largely prevalent narrative.

After all, GenAI is flexible, human-like, and acts on the fly – counteracting current conceptions of customer-facing AI.

All these qualities enable better chat experiences, as many of the following use cases exemplify.

They also highlight how GenAI is paving the way for faster, more efficient bot-building.

Lastly, they’re cutting-edge. Indeed, each use case is now available on the Cognigy and/or Kore.ai conversational AI platforms.

These are two of Gartner’s three “Customers’ Choice” enterprise conversational AI solutions.

1. Automating More Customer Queries

Example: Cognigy Knowledge AI

A customer support team will have many sources of knowledge to lean on. That includes a knowledge base, web links, and product manuals.

After understanding customer intent, a GenAI tool may parse all these materials to find the closest semantic match between a piece of knowledge and the query.

Once located, the tool sends the original query and the piece of knowledge back through an LLM, with a prompt, such as: “Answer the following customer question based on the found article.”
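The retrieve-then-prompt pattern described above can be sketched roughly as follows. This is a minimal illustration, not Cognigy's implementation: the `ARTICLES` store, the function names, and the use of simple word-overlap similarity in place of true semantic embeddings are all assumptions made to keep the example self-contained.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge snippets. A real deployment would index a knowledge
# base, web links, and product manuals instead.
ARTICLES = {
    "refund-policy": "Refunds are issued within 14 days of a returned order.",
    "reset-password": "To reset your password, open Settings and choose Reset Password.",
    "shipping-times": "Standard shipping takes three to five business days.",
}


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def closest_article(query: str) -> str:
    """Return the key of the article most similar to the query.

    Word overlap stands in for the semantic (embedding-based) match
    that a production tool would use.
    """
    q = tokenize(query)
    return max(ARTICLES, key=lambda key: cosine(q, tokenize(ARTICLES[key])))


def build_prompt(query: str) -> str:
    """Combine the instruction, the found article, and the original query."""
    article = ARTICLES[closest_article(query)]
    return (
        "Answer the following customer question based on the found article.\n"
        f"Article: {article}\n"
        f"Question: {query}"
    )


print(build_prompt("How do I reset my password?"))
```

The resulting string is what would be sent to the LLM. Which model is called, and how, is left out because it depends on the platform.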

As a result, contact centers can expand the scope of conversation automation beyond pre-trained chatbots – increasing containment rates.

Moreover, if the source information the bot used to solve the query is publicly available, it may also share that via a link – alongside the answer – so the customer can dig deeper.

Finally, some conversational AI platforms may extract insights from images within source materials – such as charts, tables, and diagrams – to inform their responses.

All this without the business creating a chatbot prototype.

2. Suggesting Intents to Automate

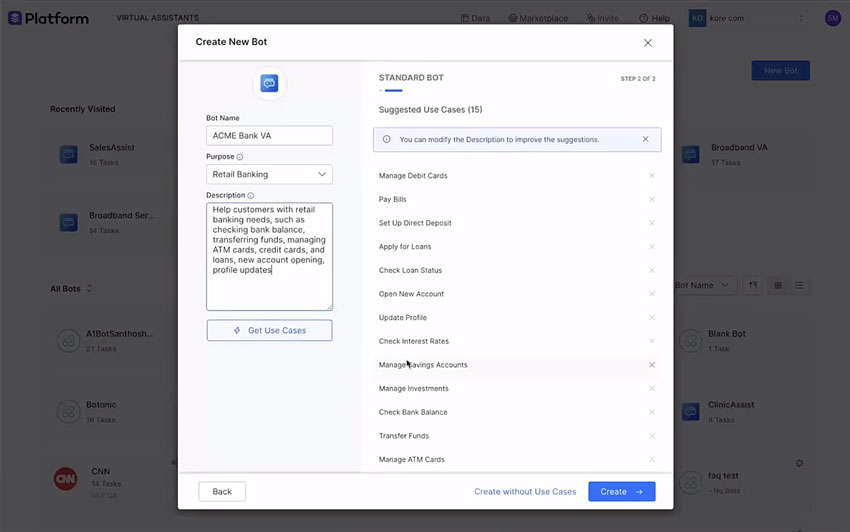

Example: Suggest Use Cases by Kore.ai

Once the developer specifies the purpose of their bot, Kore.ai’s conversational AI platform automatically suggests additional use cases.

For example, say the developer writes that the bot’s purpose is to offer customer support for a bank. The platform may then leverage LLMs to list potential intents to automate.

These could include checking a bank balance, transferring funds, and managing loans.

The developer can then enter a description to unlock more use cases, which they can add to the chatbot – as the screenshot above highlights.

They may then select use cases for the bot from this list and – with the click of a button – generate prospective bot flows across each intent (more on this below!).

3. Generating New Bot Flows

Example: AI-powered Flow Generation by Cognigy

Conventionally, companies create chatbots from scratch on an empty canvas.

However, brands may now build bot flows from only natural language descriptions.

Cognigy’s offering places a panel next to these auto-generated bot flows for developers to test the simulated chat and voice experiences.

Based on their findings, the developer can adapt, expand, and finetune the flow as required. They may also connect it to various APIs to complete the experience.

Some models – including those offered by Cognigy and Kore.ai – may even define the necessary API integration to complete the flow.

4. Creating Lexicons

Example: AI-powered Lexicons by Cognigy

A lexicon is a vocabulary set that businesses drill into their bots so they understand the jargon that customers and employees often use.

Lexicons may cover everything from shorthand terms for pizza toppings to airport codes, such as “LAX” for Los Angeles International Airport.

A GenAI tool may auto-generate such a list of terms from a simple description. To use the airport example, the user may type a message like the following into a GenAI-powered solution:

A lexicon containing European Airport codes, like “AMS” and “CDG”.

The tool may then create such a lexicon, which the airline can review, finetune to its flight plan, and embed into its bots.
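To make the idea concrete, a lexicon can be thought of as a lookup from jargon terms to their meanings. The sketch below is an assumption about shape only: `AIRPORT_LEXICON` and `find_terms` are hypothetical names, and Cognigy's actual lexicon format is not shown here.

```python
import re

# Hypothetical two-entry lexicon using the airport codes from the example prompt.
AIRPORT_LEXICON = {
    "AMS": "Amsterdam Airport Schiphol",
    "CDG": "Paris Charles de Gaulle Airport",
}


def find_terms(utterance: str, lexicon: dict[str, str]) -> list[str]:
    """Return the lexicon keys that appear as whole words in the utterance."""
    words = re.findall(r"[A-Za-z]+", utterance.upper())
    return [word for word in words if word in lexicon]


codes = find_terms("Is my flight from ams to CDG delayed?", AIRPORT_LEXICON)
print([AIRPORT_LEXICON[code] for code in codes])
```

Matching is case-insensitive here, so a customer typing “ams” is still recognized.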

5. Producing New Training Data

Example: AI-powered Training Data by Cognigy

Previously, chatbot designers had to plug all the possible ways a customer could ask a question into their bot for each intent.

Advances in NLU have improved this process. Yet, generative AI has taken it further by auto-generating long lists of customer utterances that signal a specific intent, which developers can then use to train NLU models.

Again, consider an airline example, where a developer wants to build out a set of queries a customer would ask when they’ve lost their luggage.

They simply have to write something into the GenAI tool, such as:

A collection of customer utterances who lost or cannot find their luggage.

And voila, they have a list to edit – and possibly enrich with production data – that will help cut the time they spend prototyping chatbots.

In doing so, developers can ensure the chatbot functions across all these utterances, even those with misspellings and grammatical issues.

6. Finetuning Intent Modelling

Example: Utterance Testing by Kore.ai

NLU models that understand customer intent existed long before the advent of LLMs. Many worked well but struggled with disambiguation.

As a result, intent engines sometimes churn out false positives, and the customer kickstarts a journey they don’t want to be on: cue confusion and frustration.

By combining LLMs and machine learning, Kore.ai matches a customer query with various possible intents and gives each a confidence score. It then suggests the intent with the highest confidence score, which is most likely correct.

If none meet the high confidence score threshold, a developer can defer the intent and finetune the model.
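The selection logic described above can be illustrated with a short sketch. The threshold value, the intent names, and the `pick_intent` function are assumptions for illustration. Kore.ai's actual scoring and threshold settings are not documented in this article.

```python
from typing import Optional

# Assumed threshold. The real value would be configured on the platform.
CONFIDENCE_THRESHOLD = 0.7


def pick_intent(
    scores: dict[str, float], threshold: float = CONFIDENCE_THRESHOLD
) -> Optional[str]:
    """Return the highest-scoring intent, or None if it falls below the threshold.

    A None result signals that the utterance should be deferred for review
    rather than routed to a possibly wrong journey.
    """
    if not scores:
        return None
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else None


print(pick_intent({"check_balance": 0.91, "transfer_funds": 0.42}))
print(pick_intent({"check_balance": 0.55, "manage_loan": 0.48}))
```

Returning `None` instead of the best low-confidence guess is the design choice that avoids the false positives mentioned earlier.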

As such, the next time a customer uses that utterance, they’ll follow the most likely correct journey.

7. Giving the Chatbot a Persona

Example: GPT Conversation Node by Cognigy

Generative AI can flex to answer questions in various tones. Brands may leverage this capability to give their chatbots a character.

Indeed, some solutions allow developers to specify the bot’s persona. Whether they want it to be “professional and patient” or “empathetic and quirky” – the bot will churn out a response in their chosen style.

Cognigy – for instance – even allows businesses to specify the “strictness” of their bot. Should it stick to the task, engage in trivia and small talk, or go “completely freestyle”? These are all options.
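One plausible way such persona and strictness settings reach the model is as instructions in a system prompt. The sketch below assumes that approach. The strictness labels, the rule wording, and `build_system_prompt` are hypothetical and are not Cognigy's option names.

```python
# Hypothetical strictness levels mapped to plain-language instructions.
STRICTNESS_RULES = {
    "strict": "Only discuss the current task and politely decline anything else.",
    "relaxed": "Stay focused on the task, but brief small talk and trivia are fine.",
    "freestyle": "Respond freely to whatever the customer brings up.",
}


def build_system_prompt(persona: str, strictness: str) -> str:
    """Compose a system prompt from a persona description and a strictness level."""
    if strictness not in STRICTNESS_RULES:
        raise ValueError(f"Unknown strictness level: {strictness!r}")
    return (
        "You are a customer service assistant. "
        f"Your tone is {persona}. "
        f"{STRICTNESS_RULES[strictness]}"
    )


print(build_system_prompt("professional and patient", "strict"))
```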

For now, such elements come with the additional risk of hallucination. Yet, as LLMs improve, that danger will gradually decrease.

8. Keeping Conversations on Track

Example: Digression and Corrections by Kore.ai

It’s straightforward enough to design an interaction that follows a logical flow. Yet, customer conversations are rarely like that.

Customers will ask unexpected questions, change their minds, and sometimes even alter their intent. At these points, automated conversations typically break down.

Yet, GenAI can detect that change in focus. From there, it will either drag the conversation back on track or pull the conversation back to an earlier stage if the customer wishes to correct a previous response.
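The two recovery behaviors can be sketched as a small slot-filling flow. This is an illustrative assumption rather than Kore.ai's design: the `STEPS` list and the `Flow` class are hypothetical, and the `kind` label ("answer", "digression", or "correction") is presumed to come from a separate GenAI classification step that is not shown.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical ordered steps for a flight-booking flow.
STEPS = ["origin", "destination", "date"]


@dataclass
class Flow:
    answers: dict = field(default_factory=dict)

    def next_step(self) -> Optional[str]:
        """Return the earliest step that still has no answer."""
        for step in STEPS:
            if step not in self.answers:
                return step
        return None

    def handle(self, kind: str, value: str = "", step: str = "") -> str:
        """Update the flow for one customer message and say what to do next."""
        current = self.next_step()
        if kind == "answer" and current:
            self.answers[current] = value
        elif kind == "correction":
            # Clear the earlier answer so the flow returns to that stage.
            self.answers.pop(step, None)
        # A digression stores nothing, so the same step is asked again.
        upcoming = self.next_step()
        return f"ask:{upcoming}" if upcoming else "done"


flow = Flow()
print(flow.handle("answer", "Berlin"))
print(flow.handle("digression"))
print(flow.handle("correction", step="origin"))
```

After a digression the bot re-asks the pending question. After a correction it returns to the stage the customer wants to change.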

9. Monitoring Customer Sentiment

Example: GPT Prompt Node by Cognigy

LLMs can detect customer sentiment in real-time, and some GenAI applications leverage this capability to score a customer’s happiness after each reply.

Such a score is a useful metric for monitoring bot performance across intents and can be more accurate than conventional sentiment analysis models, particularly when a message's tone contradicts its literal wording.

Consider the following example shared by Cognigy. It includes two customer replies that are semantically similar. Yet, they receive very different sentiment scores on a scale of one to five.

Why? Because the latter statement includes sarcasm, which the GenAI tool has picked up on and factored into its sentiment score.

Those scores may filter through to the CRM to inform possible marketing, sales, and retention initiatives. Yet, they may also trigger a real-time escalation to a live agent – if the score is poor after several customer replies.
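The escalation rule described above might look something like the following sketch. The cut-off for a "poor" score and the number of replies to consider are assumed values, and `should_escalate` is a hypothetical helper. The article only states that scores run from one to five.

```python
def should_escalate(scores: list[int], poor: int = 2, window: int = 3) -> bool:
    """Return True when the last `window` sentiment scores are all poor.

    Scores are on the one-to-five scale. `poor` and `window` are assumed
    settings that a business would tune.
    """
    recent = scores[-window:]
    return len(recent) == window and all(score <= poor for score in recent)


print(should_escalate([4, 2, 1, 2]))
print(should_escalate([2, 1, 4]))
```

Requiring several poor scores in a row, rather than one, avoids handing off to a live agent over a single terse reply.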

10. Auto-Summarizing Automated Conversations

Example: Smart Assist by Kore.ai

There are already many generative AI use cases for customer service – and one of the most widely deployed is auto-summarizing customer conversations.

That involves reducing the customer transcript into four or five conversation highlights. The agent then uploads this to the CRM for greater insight into the customer journey.

Yet, generative AI chatbots may also do so for automated conversations, combining the summary with a disposition tag and case status note – i.e., resolved or unresolved.
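As a rough picture of what such an automated wrap-up could contain, the sketch below bundles the three pieces into one record. The field names and the `to_crm_record` method are assumptions, not the Smart Assist data model.

```python
from dataclasses import asdict, dataclass


@dataclass
class ConversationSummary:
    highlights: list[str]  # the four or five conversation highlights
    disposition: str       # disposition tag, e.g. a contact reason
    resolved: bool         # case status

    def to_crm_record(self) -> dict:
        """Flatten the summary into a dict with a human-readable status."""
        record = asdict(self)
        record["status"] = "resolved" if record.pop("resolved") else "unresolved"
        return record


summary = ConversationSummary(
    highlights=["Customer reported lost luggage", "Bot filed a tracing request"],
    disposition="lost_luggage",
    resolved=False,
)
print(summary.to_crm_record())
```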

What to Expect In the Future

As LLMs evolve and expand, chatbot providers will place more emphasis on orchestrating various models and optimizing them for particular use cases and costs.

Of course, new use cases will come to the fore. Some of these will focus on further streamlining the bot-building experience. Yet, others will open up entirely new possibilities.

For instance, consider how GPT-4 – the model behind the latest iteration of ChatGPT – allows for visual inputs. It may also analyze and classify such visuals.

In bringing such a capability to the table, conversational AI vendors may further increase the scope for conversation automation.

Yet, in the short term, expect use cases like the above to break down the barriers to adopting chatbots. These include limited data sets, the need for extensive developer expertise, and long conversational design processes.

Instead, GenAI is helping to put conversational AI platforms into the hands of less IT-focused CX experts, which may encourage a significant increase in chatbot adoption.
