Right now, it seems as if every tech vendor is innovating with generative AI.

Yet, as the trend accelerates, more experts have started to voice their concerns over the development of AI.

These concerns span many areas, and the enterprise is one of them.

There, possible risks include generative AI applications feeding fabricated information to customers and breaching copyright.

Perhaps most worrying, however, are concerns over how these applications may endanger data privacy.

Microsoft recently moved to quell these concerns with plans to offer a private version of ChatGPT.

Hosted on dedicated servers, this private version will run copies of the AI software with “advanced privacy protection methods” to avoid data leaks.

So, problem solved, right? Perhaps, for now. Yet, additional risks may scupper future use cases of generative AI in the enterprise.

The Current Vision for Generative AI in the Enterprise and Its Flaws

The future of generative AI in the enterprise is not limited to text generation. The next frontiers will be image and video models.

So far, these models have not digested every video on YouTube or all the podcast audio on Spotify. Yet when they do – which is the direction of travel – enterprises will repurpose them and feed them massive corpora of audio and visual data.

Then, as more business communication moves from email and messaging to video calls, recorded meetings, and audio notes, the conversation will move beyond companies wanting private versions of ChatGPT into which they can upload their own text data.

Instead, businesses will want their own multimodal assistants running inside these experiences.

Microsoft has painted that vision with Copilot. Yet James Poulter, CEO of Vixen Labs, points out a rather large assumption within those plans that may limit Copilot’s potential.

“[With Copilot] it seemed a given that we’ll all be happy to have this running in the background of our Teams calls,” he said during CX Today’s latest BIG News Show. “But I think Microsoft is going to start hitting lots of significant hurdles with their big enterprise customers.

“They will not be happy that all of those video calls are going to be auto-transcribed and uploaded, and that’s why significant orchestration around secure environments has got to happen.”

For starters, enterprise systems that use generative AI will need end-to-end encryption covering both prompts and replies.

Then there is on-cloud encryption, which ensures that generative AI applications cannot access selected data stored within SaaS systems.

These are just two of the significant hurdles that enterprises will likely have to overcome.

Making this point, Poulter stated:

“The history of the internet is giving up privacy for utility. We’re used to that as individuals, but – as companies – I just don’t think that we are willing to do that transaction in the same way.”

Moreover, the risk grows considerably when so many enterprise applications are concentrated among just three or four big tech providers.

Will History Repeat Itself?

Vendors must keep enterprise security at the forefront of their innovation strategy when building multimodal generative AI applications like Copilot.

They should also clearly communicate how brands can use their solutions safely.

Amazon Alexa taught us this five or six years ago, giving us a crash course in the complex relationship between a darling of mainstream technology and corporate defensibility.

Turn back the clock, and the smart speaker was everywhere and all anyone wanted to talk about. It appeared to have a vast blue ocean of opportunity to go anywhere, including into boardrooms to help reshape how the office worked.

Then what happened? A slew of negative press, with stories of how Alexa took a conversation from someone’s bedroom in one house and sent it to another.

Next, investigators sought its recordings in a Florida murder case, and so on and so forth.

Bradley Metrock, CEO of Project Voice, warned: “All of a sudden, the tone changed… I feel like we’re watching almost the same story play out here.

“Consider Microsoft. Look at the brand. It’s clean, inoffensive, and well-pruned, similar to how Google used to be. All it takes is one wrong story to start a cavalcade of stuff that changes everything.”

Now, negative press was perhaps not the only reason Alexa didn’t take off in corporate America. Much of it had to do with the architecture: how it captured what someone said, where that data resided, and who had access to it.

Yet, without clear communication of the steps vendors are taking to keep their solutions secure, history could repeat itself – on a much larger scale.

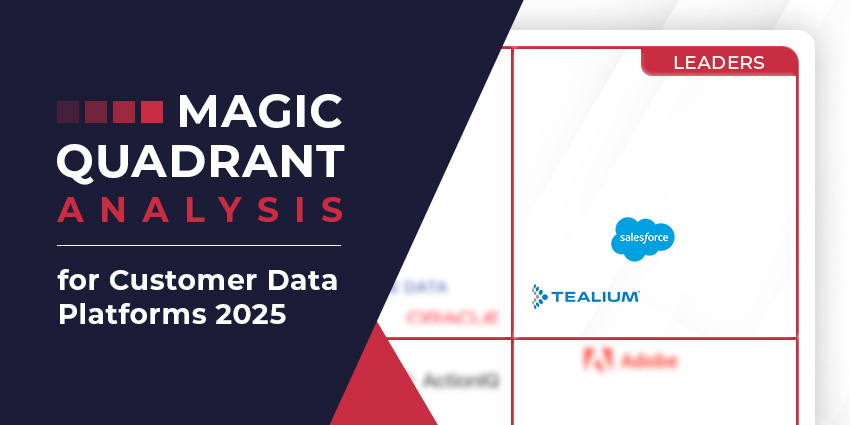

Catch up on Gartner’s advice for securing enterprise generative AI applications by reading our article: The Risks of Using Generative AI In Your Business