Speak to frontline agents and CX leaders about the future of customer service, and you’ll notice something that doesn’t show up in the metrics dashboards or vendor presentations.

AI was supposed to make contact center work easier. That was the promise, anyway. Fewer repetitive tasks. Smarter routing. Real-time guidance that helps agents deliver better service without the stress.

But when you sit down with agents away from their supervisors and their performance scores, many will tell you a different story. They’re tired. Not from handling difficult customers or meeting ambitious targets, but from something more subtle and harder to name.

They’re exhausted from being watched, guided, and scored by systems that never stop paying attention.

The Well-Intentioned Digital Supervisor

The technology itself is impressive. Modern AI systems can now analyze 100% of customer interactions rather than the traditional 1-3% sample, tracking everything from sentiment shifts to script adherence in real time.

Contact centers have embraced these tools enthusiastically. According to Omdia’s 2025 Digital CX Survey, the top reason North American contact center leaders invest in AI-powered CX technologies is to minimize agents’ cognitive load, stress levels, and burnout.

The intentions are good. AI drafts responses during live calls. It surfaces customer data automatically. It whispers coaching advice through headsets. It summarizes interactions the moment they end.

For supervisors, the benefits seem clear. Dashboards display sentiment scores, performance metrics, and coaching opportunities across the entire team. No interaction goes unanalyzed. No pattern goes unnoticed.

But here’s what the metrics don’t capture: in the same Omdia survey, 75% of North American contact center leaders expressed concern about AI’s impact on agents’ well-being.

Something isn’t working as planned.

The Cognitive Tax of Constant Guidance

Consider what it’s like to work with an AI assistant that never stops helping.

You’re on a call with a frustrated customer. The AI pops up a suggested response. You’re already forming your own reply based on years of experience, but now you need to decide whether to use the suggestion or follow your instinct. That’s one decision.

The system flags the customer’s rising frustration. You already sensed it, but the alert adds pressure. That’s another decision.

Your response goes slightly off-script. The AI doesn’t flag it as an error, but your performance score dips a little. You notice. That’s mental energy spent wondering whether you made the right choice.

According to research on AI in contact centers, these tools are often used to intensify monitoring, through AI-enabled cameras and perpetual real-time coaching, in ways that drive up stress and burnout, increase insecurity, and decrease satisfaction at work.

Psychologists call this a vigilance tax. It’s the extra cognitive effort required to monitor and correct a system that’s supposed to reduce your workload. Every time an agent pauses to evaluate an AI suggestion, or worries about how their natural response will score against the algorithm, they’re paying that tax.

Recent data shows 87% of agents report high stress levels, and over 50% face daily burnout, sleep issues, and emotional exhaustion. The paradox is striking: technology designed to help is contributing to the problem it was meant to solve.

From Assistance to Algorithmic Management

The shift has been gradual but significant. AI tools don’t just assist anymore. In many contact centers, they manage.

AI-based coaching software serves as an algorithmic manager, providing automated coaching and direction during calls while also generating performance data that can influence variable pay, training, or disciplinary recommendations.

The system decides which calls agents take. It monitors tone of voice. It measures empathy. It tracks when agents pause, when they deviate from suggested language, and when they take too long to respond. All of this data feeds into performance evaluations that can affect pay, promotion opportunities, and job security.

Research indicates around 70% of workers feel that performance data is used primarily for disciplinary purposes rather than skill development, creating a sense of constant surveillance rather than support.

One agent described it this way: “I feel like I’m in a driving test that never ends. Even when I know I’m doing the right thing for the customer, I’m wondering how the algorithm will score it.”

The Reality Behind the Dashboard

Let me share a real-world scenario that plays out across contact centers every day.

An experienced agent handles a complex financial services call. She’s been with the company for five years and knows the products inside out. A customer is confused about a recent charge.

Her AI assistant, trained on thousands of similar interactions, immediately suggests a scripted response that technically addresses the question. But the agent senses the customer needs something different. A more personal explanation. An acknowledgment of their frustration before diving into the details.

She follows her instinct. The call ends well. The customer is satisfied.

But her performance score for that interaction dips slightly because she deviated from the AI’s recommendation. Her supervisor doesn’t question it, but she notices. Next time, she hesitates a fraction longer before trusting her judgment.

This is the pattern in miniature: monitoring that second-guesses sound judgment drives up stress and burnout, increases insecurity, and decreases satisfaction at work.

Multiply this scenario across hundreds of interactions, and you begin to understand why agents feel drained not by customers, but by the technology layer between them and their work.

As AI Takes Routine Work, Complex Calls Intensify

There’s another dimension to this challenge that deserves attention. As AI takes over routine customer inquiries, agents face more complex interactions, ramping up their stress levels.

The automation of simple queries means agents spend more of their day handling difficult situations. Angry customers. Technical problems that defy standard solutions. Emotionally charged conversations that require empathy and judgment.

These interactions are inherently more demanding. When combined with real-time AI monitoring and guidance, the cognitive load becomes significant.

Studies show more than 68% of agents receive calls at least weekly that their training did not prepare them to handle. The AI can suggest responses, but it can’t fully prepare agents for the emotional complexity of these conversations.

What CX Leaders Can Do Differently

The solution isn’t to abandon AI. The technology offers genuine benefits when implemented thoughtfully. But contact center leaders need to recognize that automation and monitoring tools create new forms of stress even as they solve old problems.

Here’s how forward-thinking CX leaders are addressing this balance:

Design for Support, Not Control

AI should augment agent capabilities without dictating every decision. Agents need space to use professional judgment and apply empathy in ways that algorithms can’t anticipate.

Be Transparent About How Scoring Works

Hidden metrics erode trust. When agents understand how performance algorithms work and what they’re measuring, they feel less like they’re being judged by an inscrutable system.

Train for Confidence Alongside Compliance

The goal should be helping agents use AI as a tool they control, not a test they must pass. Training programs should emphasize when to follow AI suggestions and when to override them.

Monitor Wellbeing as Closely as Metrics

Track emotional exhaustion, cognitive load, and job satisfaction with the same rigor applied to average handle time and customer satisfaction scores. According to ICMI research, 45% of organizations and 55% of contact centers do not measure employee satisfaction or stress levels.

Protect Agent Autonomy

Give agents the ability to override AI recommendations when they know what’s best for the customer. Document these overrides not as failures, but as examples of professional judgment that can improve the AI’s training data.
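One way to picture documenting overrides as judgment rather than failure is a simple log that records why the agent departed from the suggestion and how the call turned out. The sketch below is illustrative only: `OverrideRecord`, its fields, and `summarize_overrides` are hypothetical names, not part of any vendor’s product, and the sample records are made up.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class OverrideRecord:
    """One occasion where an agent chose not to follow the AI suggestion (hypothetical schema)."""
    agent_id: str
    ai_suggestion: str
    agent_action: str
    reason: str               # the agent's own explanation
    customer_satisfied: bool  # outcome of the call


def summarize_overrides(records):
    """Count overrides by reason and report how often they ended well."""
    by_reason = Counter(r.reason for r in records)
    satisfied = sum(r.customer_satisfied for r in records)
    rate = satisfied / len(records) if records else 0.0
    return {"by_reason": dict(by_reason), "satisfied_rate": rate}


# Made-up sample data for illustration.
records = [
    OverrideRecord("a-17", "Read billing script", "Acknowledged frustration first",
                   "customer needed empathy", True),
    OverrideRecord("a-17", "Offer standard refund", "Escalated to specialist",
                   "issue outside script", False),
]
summary = summarize_overrides(records)
print(summary)
```

A summary like this gives coaches and model owners something to review together, without the override itself counting against the agent’s score.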

Limit Real-Time Interventions

Constant suggestions and alerts increase cognitive load. Consider whether agents truly need real-time guidance on every interaction, or whether post-call coaching might be more effective for development.

By addressing the root causes of burnout and promoting strategies that balance AI efficiency with human wellbeing, we can create a more sustainable and productive contact center environment.

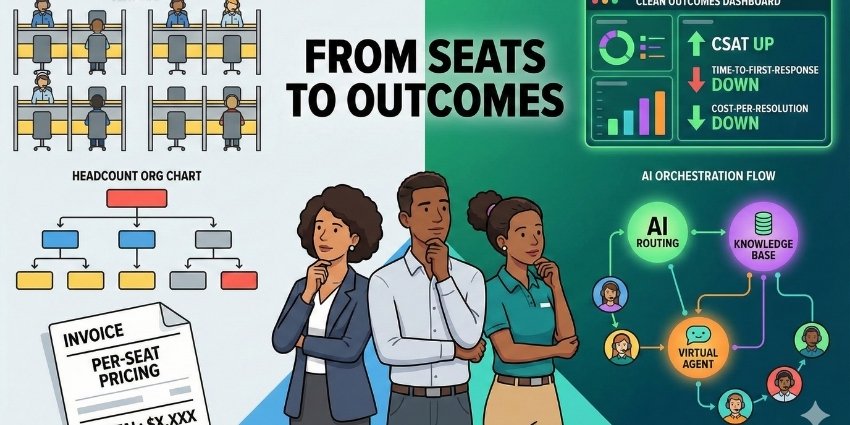

The Economic Reality

The business case for getting this right is compelling. According to Frost & Sullivan, replacing a single agent can cost $30,000 to $40,000. For an organization with 1,000 agents and an attrition rate of 40%, this translates to as much as $16 million in annual replacement costs alone.
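The arithmetic behind that figure is easy to check. The short sketch below uses only the numbers quoted above (1,000 agents, 40% attrition, $30,000 to $40,000 per replacement); the function name `annual_replacement_cost` is an illustrative assumption, and the model deliberately ignores anything beyond headcount, attrition rate, and per-agent cost.

```python
def annual_replacement_cost(headcount: int, attrition_rate: float, cost_per_agent: int) -> int:
    """Agents lost per year multiplied by the cost to replace each one."""
    agents_lost = round(headcount * attrition_rate)
    return agents_lost * cost_per_agent


# Figures quoted in the text: 1,000 agents, 40% attrition, $30k-$40k per agent.
low = annual_replacement_cost(1_000, 0.40, 30_000)
high = annual_replacement_cost(1_000, 0.40, 40_000)
print(f"${low:,} to ${high:,}")  # prints "$12,000,000 to $16,000,000"
```

Plugging in your own headcount and attrition rate gives a rough sense of what is at stake before any change to monitoring or coaching practices is evaluated.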

Industry data shows the average attrition rate has risen from 42% in 2022 to 60% currently. While multiple factors contribute to this increase, the relationship between AI implementation and agent wellbeing cannot be ignored.

When experienced agents leave, they take valuable knowledge and skills that aren’t easily replaced. New hires require time to develop the nuanced judgment that makes customer interactions successful. This can lead to longer call-handling times, increased error rates, and lower customer satisfaction scores.

A Call for Thoughtful Implementation

The contact center industry stands at a crossroads. AI technology will continue advancing. Automation will expand. The question isn’t whether to adopt these tools, but how to implement them in ways that empower rather than exhaust the humans who use them.

Contact center agents are often strongly motivated to provide high-quality customer service. They embrace these technologies when they are adopted in ways that support employees, helping them apply or develop their skills and be more effective in their jobs.

The path forward requires recognizing that the best AI systems don’t replace human judgment. They amplify it. They provide information and support without demanding constant attention or creating anxiety about algorithmic scoring.

Companies that figure this out will have a significant advantage. They’ll retain experienced agents who develop deeper expertise over time. They’ll deliver better customer experiences because their agents aren’t mentally exhausted from managing the technology layer. They’ll build cultures where AI is genuinely seen as a helpful tool rather than an ever-present evaluator.

Here’s What Keeps Me Up at Night

AI can listen faster, analyze deeper, and remember more than any human ever could. But it doesn’t understand what it means to be trusted by a customer in a moment of frustration. It doesn’t know what it feels like to have your professional judgment constantly scored and compared to an algorithm’s recommendation.

As AI capabilities expand, contact centers face a defining question that will shape the future of customer service work: Are we building technology that empowers people to do their best work, or are we creating systems that quietly train them to stop thinking for themselves?

The human voice, with all its imperfections and intuitive understanding, remains what customers trust most. The challenge for CX leaders is ensuring that the technology we implement strengthens that human connection rather than making it harder to maintain.

No amount of automation can replace the judgment of an experienced agent who truly understands their customer’s needs. The question is whether we’re giving those agents the space and support to use that judgment, or whether we’re measuring and monitoring them into exhaustion.

The answer will determine not just the future of contact center work, but the quality of customer experience itself.
