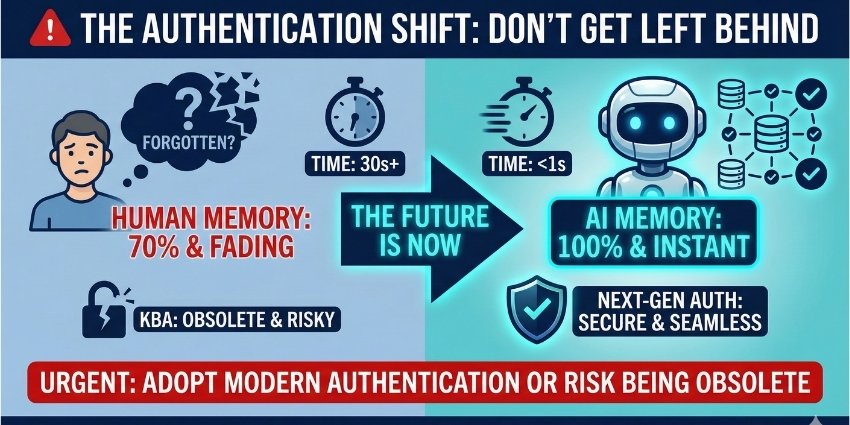

For years, knowledge-based authentication (KBA) worked because it relied on the limits of human memory. People forget details. They hesitate. They can’t instantly access every piece of personal information. AI agents calling on behalf of customers have none of these limitations, and that is breaking authentication systems built for humans.

Table of Contents

- What Is Knowledge-Based Authentication (KBA)?

- The Two Types of KBA: Static and Dynamic

- Why KBA Works for Humans (And Why That’s the Problem)

- How AI Customers Expose KBA’s Fatal Flaw

- The Authentication Crisis Contact Centers Face

- What Comes After KBA: Permission-Based Authentication

- The Dual-Path Future: Humans and AI Need Different Lanes

- What Contact Centers Must Do Now

- FAQ: Knowledge-Based Authentication (KBA)

- The Bottom Line: KBA Wasn’t Built for This

What Is Knowledge-Based Authentication (KBA)?

Knowledge-based authentication (KBA) is an identity verification method used widely in contact centers, financial services, healthcare, and other customer service settings. KBA confirms a person’s identity by asking questions based on information that should be private or difficult for others to know.

The core assumption behind KBA is simple: only the legitimate account holder knows, and can recall on the spot, specific personal details such as previous addresses, loan amounts, childhood pet names, or the make and model of their first car.

KBA has been widely adopted because it’s:

- Easy to implement – No special hardware or biometric scanners required

- Familiar to customers – Most people have answered security questions before

- Cost-effective – Doesn’t require expensive infrastructure

- Reasonably effective against casual fraud – Works well when fraudsters lack detailed personal information

However, KBA’s effectiveness depends entirely on the assumption that the caller is a human with human memory limitations. When that assumption breaks down, so does the security model.

The Two Types of KBA: Static and Dynamic

Knowledge-based authentication comes in two primary forms, each with different strengths and vulnerabilities.

Static KBA

Static KBA uses fixed information tied directly to your identity. These are pre-set security questions and answers that rarely change over time. Common examples include:

- Mother’s maiden name

- Date of birth

- Social Security number (last four digits)

- First pet’s name

- Street you grew up on

- High school mascot

Static KBA answers are typically stored in organizational databases or retrieved from credit bureau records. The weakness: once this information is compromised through a data breach or social engineering, it stays compromised, because the answers rarely if ever change.

Dynamic KBA

Dynamic KBA generates questions on the fly based on public records, credit history, or recent account activity. These questions are harder to predict and may include:

- “Which of these addresses have you lived at?”

- “What was the amount of your car loan in 2018?”

- “Which bank issued your mortgage?”

- “What was your credit card balance range last month?”

Dynamic KBA is considered more secure than static KBA because the questions change and aren’t pre-set. However, it still relies on documented information that exists somewhere in databases—information that AI agents with proper access can retrieve instantly.

Why KBA Works for Humans (And Why That’s the Problem)

KBA’s effectiveness has always been rooted in human cognitive limitations. These limitations create natural friction that helps distinguish legitimate customers from fraudsters:

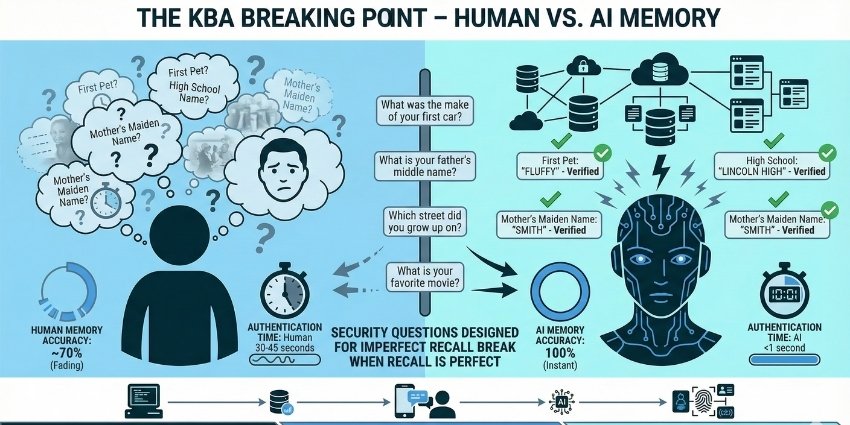

Human Memory Is Imperfect

Most people can’t instantly recall every address they’ve lived at, the exact amount of a five-year-old loan, or their childhood best friend’s middle name. KBA treats this imperfection as a feature, not a bug: the questions probe details that are hard to produce on demand, which makes them harder to fake.

Humans Hesitate and Self-Correct

Natural speech patterns include pauses, uncertainty, verbal corrections, and thinking out loud. These behavioral signals help contact center agents and fraud detection systems identify suspicious activity. A caller who answers too quickly or too perfectly may trigger red flags.

Humans Have Limited Data Access

Unless you’re carrying documentation, you’re relying on memory alone during a phone call. You can’t instantly query a database or pull up your complete credit history mid-conversation.

These limitations don’t exist for AI customers. An AI agent can operate with database-level access to a customer’s personal information. It doesn’t forget. It doesn’t hesitate. It retrieves answers with machine precision in milliseconds. When an AI agent with that access calls your contact center on behalf of a legitimate customer, it passes KBA effortlessly, not through fraud, but through authorized access and perfect recall.

How AI Customers Expose KBA’s Fatal Flaw

The fundamental problem is architectural: KBA was designed around human cognitive limitations, not machine capabilities. When AI enters the conversation, those limitations disappear—and so does the security model.

1. Perfect Recall Eliminates Authentication Friction

An AI agent doesn’t need to think back or dig through paperwork. It reads answers instantly from structured data. The security question becomes a formality, not a verification mechanism. What was designed to be difficult becomes trivial.

2. No Behavioral Signals to Detect

Human authentication includes subtle behavioral cues—hesitation, tone changes, self-correction, natural speech patterns. AI customers speak with perfect syntax, zero uncertainty, and consistent delivery. Contact center agents and fraud detection systems lose the behavioral signals they rely on to spot suspicious activity.

3. Static KBA Becomes Completely Obsolete

If an AI agent has been delegated access to an account, it has access to all static KBA answers stored in the system. Security questions don’t verify identity—they verify database access, which the AI already has by design.

4. Dynamic KBA Is Only Slightly Better

Dynamic questions based on credit history or transaction records are harder for human fraudsters to predict. But AI agents with proper delegation credentials can access this information just as easily as static data. The questions still don’t prove who the caller is; they only prove the caller has data access.

5. Fraud Detection Systems Trigger False Positives

Many contact centers use voice biometrics and synthetic speech detection to flag non-human callers. When a legitimate AI customer calls, these systems may reject the interaction entirely, creating friction for valid customers while failing to stop actual fraud.

Real-World Example: A major US bank recently received a call from an AI agent performing debt negotiation on behalf of a legitimate customer. The human agent had no policy for handling it, proceeded through standard KBA, and completed the transaction. Only afterward did they realize they’d authenticated and negotiated with software, not a person. There was no fraud—but there was also no way to distinguish this legitimate AI from a malicious one.

The Authentication Crisis Contact Centers Face

The challenge isn’t that AI customers are inherently fraudulent—it’s that they break the assumptions KBA relies on. This creates three immediate, interconnected problems:

Problem 1: You Can’t Tell Good AI from Bad AI

A legitimate AI agent acting on behalf of a customer looks identical to a fraudulent AI agent using stolen credentials. Both pass KBA. Both speak with machine precision. Both have database-level access to personal information. Traditional authentication cannot distinguish between them.

Problem 2: Blocking AI Means Blocking Legitimate Customers

As consumer AI agents become mainstream—through services like Google’s AI shopping assistant, OpenAI’s Operator, or personal AI delegation tools—blocking all AI traffic means rejecting valid customer interactions. Organizations that ban AI wholesale will create friction for the very customers they’re trying to serve.

Problem 3: Lowering Security Thresholds Increases Fraud Risk

If you relax authentication requirements to accommodate AI customers, you open the door to sophisticated fraud attacks. AI-powered scammers can use the same tools to bypass weakened security controls at scale.

Contact centers are caught in an impossible position: maintain strict KBA and reject legitimate AI customers, or loosen controls and expose the organization to AI-driven fraud.

What Comes After KBA: Permission-Based Authentication

The solution isn’t to abandon authentication—it’s to redesign it for a world where customers might not be human. This requires a fundamental shift from identity-based authentication (proving you are who you say you are) to permission-based authentication (proving you’re authorized to act on behalf of this account).

1. Token-Based Authentication

Instead of asking security questions, systems exchange cryptographic tokens that prove delegation authority. An AI agent presents a token that says, “I am authorized to act on behalf of Account X with permissions Y and Z.” The contact center validates the token, not the memory of the caller.
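
To make the idea concrete, here is a minimal sketch of a signed delegation token, assuming the contact center and the customer’s authorization service share a secret key. The claim names (`account`, `agent`, `scopes`, `exp`) and the helper functions are hypothetical. Production systems would more likely use a standard format such as a JWT signed with an asymmetric key, but the check has the same shape: verify the signature, the expiry, and the account, and never ask the caller what they remember.

```python
from __future__ import annotations

import base64
import hashlib
import hmac
import json
import time


class TokenError(Exception):
    """Raised when a delegation token fails validation."""


def _b64(data: bytes) -> str:
    # URL-safe base64 without padding, so the token is a plain ASCII string.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _unb64(text: str) -> bytes:
    # Restore the padding that _b64 stripped before decoding.
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(secret: bytes, payload: str) -> str:
    return _b64(hmac.new(secret, payload.encode(), hashlib.sha256).digest())


def issue_token(secret: bytes, account: str, agent: str, scopes: list[str],
                ttl_seconds: int = 900, now: float | None = None) -> str:
    """Sign a claim that `agent` may act on `account` with `scopes` until expiry."""
    issued_at = time.time() if now is None else now
    claims = {"account": account, "agent": agent,
              "scopes": sorted(scopes), "exp": issued_at + ttl_seconds}
    payload = _b64(json.dumps(claims, separators=(",", ":")).encode("utf-8"))
    return f"{payload}.{_sign(secret, payload)}"


def verify_token(secret: bytes, token: str, account: str,
                 now: float | None = None) -> dict:
    """Return the claims if the token is authentic, unexpired, and for `account`."""
    try:
        payload, signature = token.split(".")
    except ValueError:
        raise TokenError("malformed token") from None
    # Constant-time comparison avoids leaking how much of the signature matched.
    if not hmac.compare_digest(signature.encode(), _sign(secret, payload).encode()):
        raise TokenError("bad signature")
    claims = json.loads(_unb64(payload))
    current = time.time() if now is None else now
    if current >= claims["exp"]:
        raise TokenError("token expired")
    if claims["account"] != account:
        raise TokenError("token issued for a different account")
    return claims
```

A short expiry limits how long a leaked token stays useful, which is something a compromised static KBA answer never offers.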

2. OAuth and Delegation Protocols

Organizations implement OAuth-style delegation frameworks where customers explicitly grant AI agents limited permissions. Example: “My AI can check my balance and pay bills, but it cannot change my address or close my account.” These permissions are cryptographically verified, not verbally confirmed.
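
The balance-and-bills example above can be expressed as an explicit, deny-by-default permission check. This is a minimal sketch: the `Grant` record, the action names, and the scope strings are hypothetical, and in a real OAuth deployment the granted scopes would come from a validated access token rather than an in-memory object.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical mapping from a requested action to the scope it requires.
REQUIRED_SCOPE = {
    "get_balance": "check_balance",
    "pay_bill": "pay_bills",
    "update_address": "change_address",
    "close_account": "close_account",
}


@dataclass(frozen=True)
class Grant:
    """Permissions a customer has explicitly delegated to one AI agent."""
    account: str
    agent: str
    scopes: frozenset[str]


def is_allowed(grant: Grant, agent: str, account: str, action: str) -> bool:
    """Deny by default: agent, account, and the action's scope must all match the grant."""
    if grant.agent != agent or grant.account != account:
        return False
    required = REQUIRED_SCOPE.get(action)
    # Unknown actions have no required scope on record, so they are refused.
    return required is not None and required in grant.scopes


# "My AI can check my balance and pay bills, but it cannot change my address
# or close my account."
grant = Grant(account="acct-123", agent="my-assistant",
              scopes=frozenset({"check_balance", "pay_bills"}))
```

Refusing unknown actions matters: a new capability added to the system later stays off for every agent until customers explicitly grant it.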

3. Multi-Factor Machine Authentication

Machine-to-machine authentication relies on machine-readable credentials (API keys, digital certificates, signed requests), often layered together, that prove both identity and authorization without relying on knowledge questions designed for humans.
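
A signed request is one of the machine-readable credentials mentioned above. The sketch below assumes each AI agent holds a secret key registered with the contact center and signs every request over its method, path, timestamp, and a hash of the body; that field layout and the five-minute skew window are assumptions, not a standard. Production systems often prefer asymmetric signatures or mutual TLS certificates so the server never stores the agent’s secret.

```python
import hashlib
import hmac

MAX_SKEW_SECONDS = 300  # assumption: reject signatures more than 5 minutes from server time


def _canonical(method: str, path: str, timestamp: int, body: bytes) -> bytes:
    # Any change to method, path, time, or body changes the signed string.
    body_hash = hashlib.sha256(body).hexdigest()
    return f"{method.upper()}\n{path}\n{timestamp}\n{body_hash}".encode()


def sign_request(key: bytes, method: str, path: str, timestamp: int, body: bytes) -> str:
    """Signature the agent attaches to each request."""
    return hmac.new(key, _canonical(method, path, timestamp, body), hashlib.sha256).hexdigest()


def verify_request(key: bytes, method: str, path: str, timestamp: int,
                   body: bytes, signature: str, now: int) -> bool:
    """Accept only fresh requests whose signature matches the agent's key."""
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False  # stale or future-dated: narrows the replay window
    expected = sign_request(key, method, path, timestamp, body)
    return hmac.compare_digest(expected.encode(), signature.encode())
```

The timestamp check narrows the replay window but does not close it; pairing it with a one-time nonce that the server remembers would.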

4. Minimum Permissible Access

Systems enforce least-privilege principles, granting AI agents only the minimum access needed to complete specific tasks. Even if an AI is compromised, its damage is limited to its narrow permission scope.
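
One way to apply least privilege is to mint a narrower credential per task rather than handing the agent everything the customer granted. In this sketch the task names, scope strings, and the `scopes_for_task` helper are hypothetical; the point is that the issued scope set is the task’s minimum, and a task the grant does not cover is refused rather than widened.

```python
# Hypothetical mapping from a task to the smallest scope set that completes it.
TASK_SCOPES: dict[str, frozenset[str]] = {
    "check_balance": frozenset({"accounts:read"}),
    "pay_utility_bill": frozenset({"accounts:read", "payments:create"}),
    "update_mailing_address": frozenset({"profile:write"}),
}


def scopes_for_task(task: str, granted: set[str]) -> frozenset[str]:
    """Return only the scopes `task` needs; refuse if the grant does not cover them."""
    needed = TASK_SCOPES.get(task)
    if needed is None:
        raise PermissionError(f"unknown task: {task}")
    missing = needed - granted
    if missing:
        raise PermissionError(f"grant does not cover: {sorted(missing)}")
    # `needed` is a subset of `granted`, so anything extra the customer granted stays unused.
    return needed
```

For a customer who granted `{"accounts:read", "payments:create", "profile:read"}`, a balance check would receive only `accounts:read`, so a compromised balance-check credential could not move money.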

5. Continuous Authentication and Anomaly Detection

Rather than a single authentication checkpoint, systems continuously monitor behavior patterns, transaction velocity, and access anomalies. If an AI agent suddenly requests unusual actions or accesses data outside its normal patterns, the system flags it for review.
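
Transaction velocity and unusual actions are two of the signals described above. The sketch below tracks both per agent with a sliding time window; the thresholds, the agent identifiers, and the naive learned baseline are all illustrative assumptions, and a flag is meant to route the event to review rather than reject it automatically.

```python
from collections import defaultdict, deque


class VelocityMonitor:
    """Flags bursts of activity and never-before-seen actions for each agent.

    Thresholds are illustrative; a flag should trigger review, not automatic rejection.
    """

    def __init__(self, max_actions: int = 5, window_seconds: float = 60.0) -> None:
        self.max_actions = max_actions
        self.window_seconds = window_seconds
        self._recent = defaultdict(deque)  # agent -> timestamps inside the window
        self._seen = defaultdict(set)      # agent -> actions observed so far

    def observe(self, agent: str, action: str, timestamp: float) -> list[str]:
        """Record one action and return any flags it raises."""
        recent = self._recent[agent]
        # Slide the window: forget actions older than window_seconds.
        while recent and timestamp - recent[0] >= self.window_seconds:
            recent.popleft()
        recent.append(timestamp)

        flags = []
        if len(recent) > self.max_actions:
            flags.append("velocity")
        # Naive baseline: an action is unusual if this agent has history but never did it.
        # The first action only builds the baseline and is never flagged here.
        seen = self._seen[agent]
        if seen and action not in seen:
            flags.append("new_action")
        seen.add(action)
        return flags
```

A production system would learn baselines from longer histories and keep flagged actions out of the baseline until a reviewer clears them; this version adds every action immediately to stay short.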

The Dual-Path Future: Humans and AI Need Different Lanes

The most practical near-term solution is building parallel authentication paths—one optimized for humans, one optimized for AI customers.

The Human Lane

Retains familiar KBA, voice biometrics, and empathy-driven service. Agents engage in natural conversation, ask security questions, and provide emotional reassurance. This path assumes imperfect memory, behavioral cues, and the need for clarification.

The AI Lane

Uses token-based authentication, structured data exchange, and machine-to-machine protocols. There’s no need for security questions or small talk. The AI agent presents credentials, states its intent, executes the transaction, and disconnects. Speed and accuracy matter more than empathy.

Contact centers must detect which type of customer they’re serving and route accordingly. This requires:

- AI detection capabilities that identify non-human callers without blocking legitimate interactions

- Routing logic that directs AI customers to appropriate workflows

- Agent training that prepares staff to recognize and handle AI interactions

- Policy frameworks that define what AI agents can and cannot do
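
The routing requirement can be reduced to a small decision function. This is a sketch under stated assumptions: `ai_detected` stands in for whatever detection tool is deployed, `has_valid_token` for a delegation-token check, and the three lanes are hypothetical names. Detection alone never ends a call; it only changes the path.

```python
from enum import Enum


class Lane(Enum):
    HUMAN = "human"            # KBA, voice biometrics, empathy-driven service
    AI = "ai"                  # token validation and structured data exchange
    AI_STEP_UP = "ai_step_up"  # AI without valid delegation: request credentials or review


def route(ai_detected: bool, has_valid_token: bool) -> Lane:
    """Pick an authentication lane; detection changes the path, it never hangs up."""
    if has_valid_token:
        # A verified delegation credential is stronger evidence than any detector score.
        return Lane.AI
    if ai_detected:
        # Likely AI but no proof of delegation: don't run KBA it can pass trivially.
        return Lane.AI_STEP_UP
    return Lane.HUMAN
```

Checking the credential before the detector means a missed detection cannot push a legitimately delegated agent back into security questions.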

What Contact Centers Must Do Now

KBA isn’t going to disappear overnight. It still works reasonably well for human-to-human interactions, and most contact centers have years of infrastructure built around it. But the window for adaptation is closing fast.

1. Audit Your Authentication Flows

Identify every point where you rely on knowledge-based questions. Assess how vulnerable each checkpoint is to AI customers with perfect recall. Map out which interactions could be compromised and which remain secure.

2. Implement AI Detection Without Blocking Legitimate Traffic

Deploy tools that identify non-human callers, but don’t automatically reject them. Instead, route them to appropriate authentication paths. The goal is differentiation, not denial.

3. Begin Token-Based Authentication Pilots

Start small with low-risk use cases—balance inquiries, appointment scheduling, order status checks. Test OAuth delegation frameworks and machine-readable credentials before rolling them out to high-value transactions.
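
A pilot can be gated by intent so that the token path is reachable only for the low-risk use cases listed above, with everything else falling back to the existing flow. The intent names and the `pilot_stats` counter are hypothetical; counting declined token attempts is one assumed way to see which higher-value intents agents are asking for before expanding the pilot.

```python
from collections import Counter

# Hypothetical low-risk intents admitted to the token-based pilot.
PILOT_INTENTS = frozenset({"balance_inquiry", "appointment_scheduling", "order_status"})

# (intent, outcome) -> count, for reviewing the pilot before expanding it.
pilot_stats: Counter = Counter()


def choose_auth_path(intent: str, has_delegation_token: bool) -> str:
    """Use the token path only for pilot intents; everything else keeps today's flow."""
    if has_delegation_token and intent in PILOT_INTENTS:
        pilot_stats[(intent, "token")] += 1
        return "token"
    if has_delegation_token:
        # Token offered for a higher-risk intent: record the demand, don't honor it yet.
        pilot_stats[(intent, "token_declined")] += 1
    return "existing_flow"
```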

4. Build Cross-Functional Working Groups

Authentication redesign isn’t just an IT problem. It requires collaboration between operations, security, compliance, legal, and customer experience teams. The decisions you make will affect fraud exposure, customer satisfaction, and regulatory compliance.

5. Educate Agents and Leadership

Most contact center staff have never considered that a caller might be AI. Training must prepare agents to recognize AI interactions, understand delegation protocols, and know when to escalate. Leadership must understand the strategic implications and resource requirements.

FAQ: Knowledge-Based Authentication (KBA)

What does KBA stand for?

KBA stands for Knowledge-Based Authentication. It’s a security verification method that confirms identity by asking questions based on information only the legitimate account holder should know.

What is the difference between static KBA and dynamic KBA?

Static KBA uses pre-set security questions with fixed answers (like “mother’s maiden name”) that rarely change. Dynamic KBA generates questions on the fly based on credit history, public records, or recent transactions, making the questions harder to predict but still vulnerable to AI with database access.

Why is KBA failing against AI customers?

KBA was designed around human memory limitations—people forget, hesitate, and can’t instantly access data. AI customers have perfect recall and database-level access to personal information, so they pass KBA effortlessly. The security questions no longer verify identity; they only verify data access.

What is replacing KBA for AI customer authentication?

Organizations are shifting to permission-based authentication using cryptographic tokens, OAuth delegation protocols, and machine-to-machine credentials. Instead of proving “you are who you say you are,” the system verifies “you are authorized to act on behalf of this account with specific permissions.”

Can contact centers still use KBA for human customers?

Yes. The most practical approach is building dual authentication paths—one for humans using traditional KBA and voice biometrics, and one for AI customers using token-based authentication and delegation protocols. Contact centers must detect which type of customer they’re serving and route accordingly.

How do you detect if a caller is an AI agent?

AI detection tools analyze speech patterns, response timing, syntax precision, and behavioral signals. However, the goal isn’t to block AI customers—it’s to route them to appropriate authentication workflows. Legitimate AI agents should be served efficiently through machine-to-machine protocols, not rejected.

What are the risks of blocking all AI traffic in contact centers?

Blocking all AI creates friction for legitimate customers using personal AI assistants, Google’s AI shopping tools, or delegation services. As consumer AI adoption grows, blanket bans will result in rejected valid interactions, poor customer experience, and competitive disadvantage.

What is token-based authentication?

Token-based authentication uses cryptographic tokens instead of security questions. An AI agent presents a token proving it’s authorized to act on behalf of a specific account with defined permissions. The system validates the token’s authenticity and scope, not the caller’s memory.

The Bottom Line: KBA Wasn’t Built for This

Knowledge-based authentication served contact centers well for decades. It was simple, scalable, and reasonably effective against human fraudsters with human limitations. But it was never designed for a world where customers have perfect memory, instant data access, and machine-level precision.

AI customers aren’t breaking the rules—they’re exposing the fact that the rules were always based on assumptions about human cognition. Those assumptions no longer hold.

The question isn’t whether KBA will fail. It’s whether your organization will adapt before it does.

Contact centers that cling to knowledge-based authentication will find themselves caught between two bad options: reject legitimate AI customers and create friction, or accept them and open the door to sophisticated fraud. The organizations that thrive will be those that redesign authentication for a world where the customer on the other end of the line might not be human—and that’s okay, as long as they’re authorized.

The era of “What was your mother’s maiden name?” is ending. The era of “Present your delegation token” is just beginning.

Is your contact center ready for what comes next?