The contact center and customer experience landscape has entered a period of rapid development, driven largely by AI innovation. Advanced AI solutions, from generative AI chatbots to speech analytics tools, are transforming how companies and customers interact.

Used correctly, AI has the power to reduce operational costs, improve agent productivity, and even enhance customer service with more personalized, data-driven support. Unfortunately, there’s a dark side to AI in the contact center too.

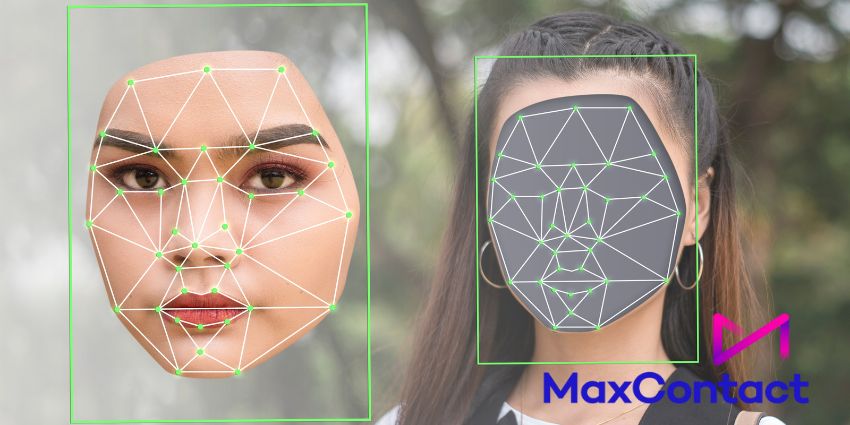

Just as companies can use AI to generate content and call scripts, bad actors can leverage the same tools to commit fraud and steal private information. Deepfakes, created to synthesize and replicate a human voice, can trick IVR systems and traditional authentication methods with relative ease.

The problem is becoming increasingly evident, to the point that OpenAI held back the release of its voice cloning tool and advised contact centers to phase out voice-based authentication as a security measure. So, how significant is the threat of deepfakes, and what can your company do about it?

The Evolving Threat of Deepfakes in Contact Centers

The growing power of AI has led to increasing concerns among customers, regulatory bodies, and companies alike. Ever since generative AI stepped into the spotlight, the concept of deepfakes has become more commonplace.

Originally, consumers associated “deepfakes” with counterfeit celebrity photos and videos. Now, however, there is growing evidence that AI can also synthesize a person’s voice with an alarming level of accuracy. Not only does OpenAI plan to release a solution specifically designed for this purpose, but many other organizations have been experimenting with conversational AI bots that can interact with contact centers on behalf of customers.

While this might seem like a good thing for customers who want to avoid long call queues and conversations, it’s a serious worry for contact centers. It also sits uneasily with the concept of “ethical AI”, because it opens the door to new ways for criminals to use AI to harm consumers.

If consumers can use bots to replicate their voice, there’s nothing stopping criminals from doing the same. While followers of ethical AI standards (such as OpenAI) can release statements highlighting the dangers of their tools, it’s up to business leaders and contact centers to proactively address the threat. That starts with understanding the patterns appearing in deepfake fraud.

“Safeguarding customer data and trust is paramount for contact centers as deepfake threats emerge. By prioritizing ethical AI practices and rigorous security protocols, businesses can drive innovation while shielding both the customer experience and their reputation.” – Ben Booth, CEO, MaxContact

How Criminals Use Deepfakes in Contact Centers

While AI-powered voice authentication tools can streamline the process of validating customers in a contact center, many struggle to differentiate between a live human voice and a bot trained to replicate that person’s voice. Pindrop recently released a report highlighting the numerous ways criminals are already using deepfakes in the contact center. For instance, bad actors are:

- Using synthetic voices to bypass IVR authentication: With highly accurate machine-generated voices, it’s relatively easy to bypass an automated IVR authentication system, particularly if criminals already have the answers to a user’s security questions. Bots can then be used to quickly identify which accounts are worth targeting, based on finance and balance inquiries, before criminals take over.

- Changing profile data with AI: Criminals can also use synthetic voices to submit requests to change a victim’s profile data, such as the email address or preferred phone number on the account. This is usually the first step a bad actor takes when they want to receive a one-time password from a company or have a new bank card delivered to an updated address.

- Mimicking bank IVRs: With intelligent tools, criminals can have bots call into a contact center to collect data about the prompts used, then teach those bots to mimic the bank’s IVR. This essentially gives criminals a way to create a convincing replica of the phone system, designed to trick customers into sharing valuable data.

- Collecting valuable data: Notably, synthetic voices aren’t always used specifically to bypass authentication methods. Many fraudsters may use a synthetic voice to gather data about accounts, learn about how IVR systems work, and collect the insights they need to further trick unsuspecting customers.

How Can Contact Centers Prepare?

The unfortunate truth is that deepfakes are already here, posing a significant threat to contact centers and their customers. Business leaders can’t simply wait for AI innovators to adhere to ethical AI guidelines or to detect criminal users themselves. Today’s contact centers need to ensure their authentication system is prepared to defend against deepfakes.

For many contact centers, the path to success starts with using ethical AI practices themselves. Contact centers investing in ethical AI strategies work with vendors to ensure data is stored safely and used correctly. They also regularly review their AI strategies to protect consumers.

Though AI might be the cause of the deepfake issue, it can also help to deliver a solution. Innovative speech analytics tools using AI can go beyond simply matching a voice to a recording. They can examine sentiment, and determine the difference between live and recorded voice. MaxContact’s speech analytics solution can even detect “vulnerable” customers in real-time, based on their language, tone, and the words they use.

Beyond fighting fire with fire by using AI to detect potentially fraudulent calls, contact centers can implement more robust, layered security plans, using strategies like:

Agent Education

Human agents will always be necessary in the contact center. Not only do they deliver empathy, creativity, and compassion during conversations, but they can be more effective than bots at differentiating between fake and live voices. Coaching employees on how to detect synthesized voices, and guiding them on what to do if they detect an issue, will be crucial.

Providing access to speech analytics tools that assist agents with synthetic voice detection can be useful too, giving them real-time insights into sentiment and vulnerability.

Utilizing Callbacks

If a caller’s voice sounds suspicious, or a company’s reporting and analytical tool flags inconsistencies during a conversation, conducting a callback can help to mitigate threats. It gives agents a chance to end a call, and then reach out to the customer using the contact details they already have on record, to ensure they’re actually speaking to the account owner.

Continuously recording contact center data, and tracking evidence of potentially “risky” callers in real time, should help agents determine when a callback may be necessary.
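As a rough illustration of the callback rule described above, the following Python sketch decides whether a call should be ended and followed up with a callback. Everything here is an assumption made for the example: the `CallSignals` fields, the `liveness_score` scale, and the 0.5 threshold are hypothetical and do not come from any specific product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CallSignals:
    """Hypothetical per-call signals; field names and scales are assumptions."""
    agent_suspicious: bool    # the agent thinks the voice sounds suspicious
    analytics_flagged: bool   # a reporting/analytics tool flagged inconsistencies
    liveness_score: float     # 0.0 (likely synthetic) to 1.0 (likely live)


def plan_callback(
    signals: CallSignals,
    number_on_record: str,
    liveness_threshold: float = 0.5,  # illustrative value, not a recommendation
) -> Optional[str]:
    """Return the number to call back, or None if no callback is needed.

    The callback always goes to the contact details already on record,
    never to a number supplied during the suspicious call.
    """
    risky = (
        signals.agent_suspicious
        or signals.analytics_flagged
        or signals.liveness_score < liveness_threshold
    )
    return number_on_record if risky else None
```

The key design choice is that the function only ever returns the number already held on file, so a caller who has just asked to change their phone number cannot redirect the callback to themselves.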

Advanced Multi-factor Authentication

Although it’s possible for a deepfake to defeat a single authentication factor, it’s much harder to defeat several independent methods at once. Rather than just assessing a customer based on their voice, use one-time passwords, combined with keypress analysis for behavior detection, or digital tone analysis for device detection.

A comprehensive multi-factor authentication process that uses various factors to determine authentication eligibility will reduce the risk posed by deepfakes.
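One way to picture this layered approach is a simple policy check in which a voice match counts as just one factor among several and is never sufficient by itself. The Python sketch below is illustrative only: the `FactorResults` fields and the `min_factors` default are assumptions, and a production system would likely weight factors and adjust for the risk of the requested action.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FactorResults:
    """Hypothetical outcomes of each check for one call (all names assumed)."""
    voice_match: bool           # voice biometrics matched the enrolled customer
    otp_verified: bool          # one-time password entered correctly
    keypress_behavior_ok: bool  # keypress analysis looks like the usual customer
    device_tone_ok: bool        # digital tone analysis matches a known device


def is_authenticated(factors: FactorResults, min_factors: int = 2) -> bool:
    """Pass only if enough factors succeed and at least one is not the voice."""
    non_voice_passed = sum(
        [factors.otp_verified, factors.keypress_behavior_ok, factors.device_tone_ok]
    )
    total_passed = non_voice_passed + int(factors.voice_match)
    # A cloned voice can satisfy voice_match, so require a non-voice factor too.
    return total_passed >= min_factors and non_voice_passed >= 1
```

Under this policy, a caller who passes only the voice check is rejected, while a caller who passes the voice check plus a one-time password is accepted.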

“The rise of deepfakes poses a severe threat, but speech analytics equips contact centers with a powerful countermeasure. By analyzing vocal patterns, emotional cues, and biometrics, these AI-driven solutions can distinguish synthetic voices from genuine customers. As deepfake capabilities evolve, investing in speech analytics becomes crucial for protecting customers and mitigating fraud.” – Matthew Yates, VP of Engineering, MaxContact.

Deepfakes are Here: Are You Ready?

Unfortunately, the idea of bots replicating humans for fraudulent purposes isn’t just a futuristic concept anymore. It’s a reality. Criminals are already leveraging generative AI tools to synthesize human voices, bypass IVR systems, and even trick customers and live agents.

To stay secure in this landscape, contact centers need to embrace ethical AI strategies and a comprehensive, multi-faceted approach to customer authentication. Failure to do so could put your company at significant risk of regulatory fines, reputational damage, and data theft.