After teasing Einstein GPT, Salesforce put a stake in the ground for what it means to take generative AI and bring it to CRM.

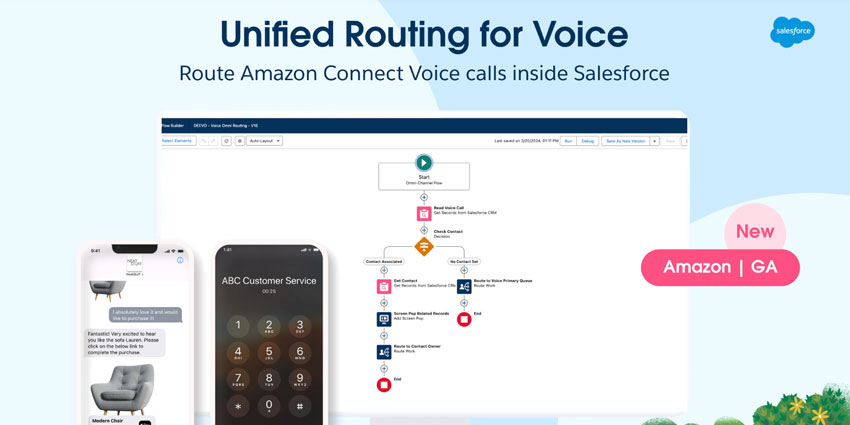

One of its CRM offerings is the Service Cloud platform, which contains several contact center tools.

Einstein AI already powers many of these, including self-service, routing, and agent-assist tools. Einstein GPT will build on that foundation to push innovation further, starting with agent assist.

To understand how, consider how the current agent-assist tools within Service Cloud work. They listen to customer conversations, predict which knowledge article or piece of CRM data may prove helpful, and surface it for agents. The rep may then use that knowledge to inform their response.

With Einstein GPT, Service Cloud will auto-generate the entire response for agents, which they can review, approve, and publish.

Yet, that is not the only use case. Einstein GPT also sifts through successful customer conversation transcripts and auto-generates knowledge articles for teams to evaluate.

In addition, it auto-generates outbound emails for teams, which they can review, tweak, and send.

Expect more Service Cloud innovations that harness the power of OpenAI’s large language models (LLMs) while keeping a human in the loop.

“By keeping the human-in-the-loop, contact centers can send customers a trusted response and train the model to get better and better,” said Ryan Nichols, SVP and GM of Contact Center at Salesforce, in conversation with CX Today.

Such trust is seemingly a critical part of Salesforce’s Einstein GPT mission, and Nichols underlines it as one of three mission-critical principles of LLM-based contact center innovation.

Three Principles for LLM-Based Contact Center Innovation

Most contact centers will not build GPT applications into their workflows themselves. Instead, many are engaging with their providers to learn how those providers plan to innovate with generative AI.

As they do, Nichols believes they should look for a vendor that:

- Deploys LLMs in a way that safeguards customer trust.

- Grounds its generative AI in the right data.

- Considers the entire generative AI ecosystem.

Prioritizing human-in-the-loop innovation is one way to safeguard trust. After all, for all their plus points, LLMs are not grounded in any definitive source of truth. As such, they are often wrong.

The challenge for vendors is to harness the generative capabilities of these models while grounding them in customer data. “When you do that, you can use the models in powerful ways,” adds Nichols.

However, while it is critical for CX vendors to prepare for this future, such use cases are not yet ready for unsupervised enterprise deployment. As Nichols states:

> I love ChatGPT, but it’s not ready to be unleashed on your customers without something in the middle.

Next, Nichols recommends that vendors think beyond ChatGPT. After all, there are many LLMs available already, and many more will come. Some will be industry-specific, and others will be function-specific.

Salesforce is preparing for this reality and is not putting all its eggs in the ChatGPT basket. As Nichols revealed:

> A core part of the Einstein GPT architecture is a large language model gateway. That is going to help you bring the right models to the platform.

Salesforce has already announced a $250 million fund to invest in generative AI start-ups, which it expects will help create a rich ecosystem that pushes generative AI forward.

To learn more about its generative AI strategy, check out our entire interview with Nichols below.