A lot of people in life will try to convince you that bigger is better.

The realtor, the car salesman, the travel agent, even your server at McDonald’s trying to get you to go for the large fries and drink.

And while sometimes it can be true, it’s not the hard and fast rule that some would like you to believe.

Be honest, which concert did you prefer? The arena show where you were stuck up in the stands watching it on a big screen because you could only just make out the people on stage? Or that time you managed to catch your favorite band in a tiny backroom somewhere before they made it big?

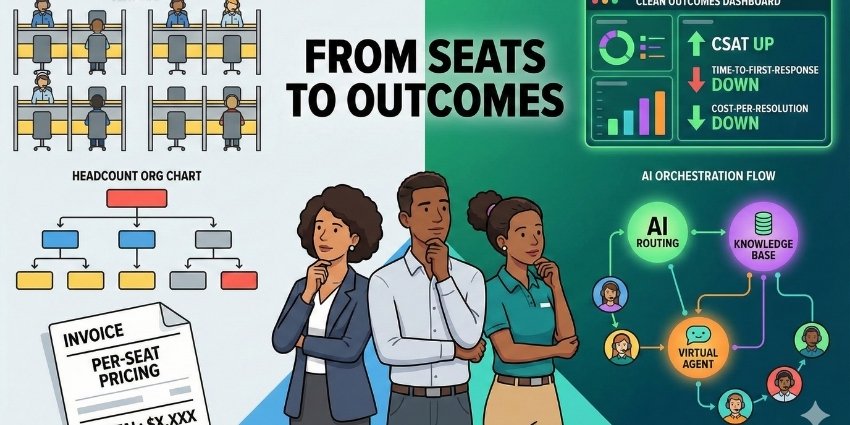

When it comes to the customer service and experience space, many of the major vendors have traditionally been in the ‘bigger is better’ camp; more specifically, they have pushed the narrative that larger AI models translate to superior outcomes.

Giant LLMs promised to reinvent customer service, automate complex workflows, and elevate the entire contact center. Yet many scaling enterprises have found themselves stuck in lengthy deployments, rising costs, and unpredictable results.

According to James Scott, Senior Solutions Engineer at Diabolocom, the inflection point often comes only after real-world testing:

“The big moment is when people realize that hefty, large general-purpose models are not the best for customer experience workflows.”

Teams begin to see quality issues, usability challenges, or unexpected cost spikes. And those frustrations lead to a simple but vital question: do large models really serve the needs of CX operations?

Increasingly, the evidence says no.

Why Bigger Isn’t Better in CX

For Scott, frontline environments rely on precision. They require fast, clear, task-specific decisions: classification, routing, QA scoring, call summarization, and compliance checks. Large general models, he explains, simply aren’t optimized for that reality.

“They’re always trying to be a jack of all trades and a master of none,” he says. “In CX, we want models to do very specific things, and we want them to do those specific things very well.”

One of the most visible consequences is hallucination. Scott puts it bluntly: “Large models tend to hallucinate because there is so much data they can pull on, and because they’ve been told to always give an answer – even if that answer isn’t something it’s 100% confident in.”

The result is an accuracy and accountability gap. Leaders buy into ambitious pre-sales visions, only to hit issues once the system must perform inside a live operation.

As Scott describes it, implementation becomes the moment when “you have to cash those checks… and they don’t quite cash.”

This is where Diabolocom takes a different approach. Rather than layering CX workflows on top of general-purpose LLMs, the company builds its own smaller, purpose-built AI models designed specifically for contact center use cases.

These models aren’t wrappers. They’re trained for defined workflows on customer-relevant data, and packaged for real operational deployment.

The Case for Specific: Faster Deployment, Faster ROI

One of the most compelling advantages of domain-specific AI is speed to value.

Diabolocom reports deployment timelines measured in weeks, not quarters.

“Smaller models are easier to operationalize,” Scott explains. With tighter, curated datasets and a focused purpose, they require far less training and tuning.

“It’s easier to compile the training data, easier to understand, and easier to get that model to a place where it’s doing what it needs to do.”

He compares it to onboarding an experienced employee. “It’s like getting somebody who has worked in an industry for a very long time and training them, versus getting someone who’s a quick learner but has never worked in that industry.

“You would always prefer someone with previous experience.”

This familiarity dramatically reduces testing time and shrinks the burden on engineering teams. Enterprises also benefit from lower compute costs and reduced infrastructure demands, an increasingly important factor as AI budgets come under scrutiny.

Why Orchestration Alone Won’t Fix It

The market is now full of AI orchestration platforms promising a single layer over massive LLMs. Yet Scott cautions that many are struggling to deliver practical ROI.

“A lot of orchestration layers, especially when they’re working with larger models, tend to just be reverse engineering the responses they get,” he says.

He explains that when the underlying models are black boxes, orchestration platforms often guess at intent or meaning rather than leveraging transparent, explainable decisions.

Their appeal is understandable. Large organizations run sprawling tech stacks, and “it’s very appealing to have a solution that claims to clean all of that up.”

But Scott emphasizes that no orchestration layer can overcome the fundamental mismatch between generic models and CX-specific tasks.

Smaller models, by contrast, are more interpretable and therefore far easier to orchestrate.

“It’s not reverse engineering,” he says. “It’s actually understanding how these models work and accommodating the orchestration to that.”

Measurable Outcomes: Cost, Accuracy, and Operational Lift

Diabolocom’s own results reinforce this shift. While Scott couldn’t share detailed client metrics in the interview, he described significant improvements across cost, accuracy, and implementation time.

“Working with our models costs less because it’s a smaller model with a smaller dataset,” he says.

“It’s more reliable because that dataset was tailored. And it’s easier to get stakeholders to a place where they understand how the model works and what it’s meant to accomplish.”

That clarity extends all the way to the front line. Teams learn faster, trust the outputs more readily, and avoid the complexity traps often associated with large-model deployments.

A Smaller Model Also Means a Smaller Risk Profile

As hallucinations and compliance risks become board-level concerns, specialized models offer a practical way to reduce uncertainty.

“Large models are incentivized to give an answer no matter what,” Scott explains. Their broad consumer use cases force them to cover every conceivable scenario, creating unpredictable behavior in controlled enterprise settings.

Smaller models behave differently. “If you ask it a question that it’s not designed to answer, it will not hallucinate,” he says. “It will not give you an answer that it’s not completely confident in.”

This means smaller models can deliver greater explainability, lower risk, and more dependable outputs for regulated industries.
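Scott doesn’t describe how this abstaining behavior is implemented, but one common pattern for narrow classifiers is a confidence threshold: if no label scores high enough, the request is handed to a person instead of being answered. The sketch below is purely illustrative. The function names, the intent labels, the `escalate_to_human` fallback, and the 0.8 threshold are all assumptions made for this example, not Diabolocom’s method.

```python
import math


def softmax(scores):
    """Turn raw per-label scores into probabilities that sum to 1."""
    top = max(scores.values())
    exps = {label: math.exp(value - top) for label, value in scores.items()}
    total = sum(exps.values())
    return {label: value / total for label, value in exps.items()}


def route(scores, threshold=0.8):
    """Return (label, confidence), or a fallback when confidence is too low.

    `scores` stands in for the raw output of a hypothetical small intent
    model. The threshold value is an assumption; in practice it would be
    tuned against labeled contact center data.
    """
    probabilities = softmax(scores)
    label, confidence = max(probabilities.items(), key=lambda item: item[1])
    if confidence < threshold:
        # Abstain rather than guess: pass the contact to a human agent.
        return "escalate_to_human", confidence
    return label, confidence


# One clear-cut request and one ambiguous request.
clear_label, _ = route({"billing": 5.0, "cancel": 0.5, "tech_support": 0.2})
vague_label, _ = route({"billing": 1.0, "cancel": 0.9, "tech_support": 0.8})
print(clear_label)
print(vague_label)
```

With these made-up scores, the first request is routed to `billing` and the second falls back to `escalate_to_human`, because none of its labels clears the threshold.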

Where Large Models Still Shine

While he clearly champions smaller models, Scott acknowledges that general-purpose models have their place.

Most notably, he sees larger models as effective for everyday consumer tasks, where they provide accessible, broad functionality.

He compares it to choosing between Windows and Linux: if you want to answer emails, do some web browsing, and work on documents, Windows is perfect; if you want to start coding and work on more complex tasks, you’ll need Linux.

So when it comes to CX, he firmly believes that organizations are “going to want to go with the smaller models.”

They deliver deeper precision, more predictable behavior, and “do the specific things better than a large model ever could.”

As scaling enterprises look for AI that is fast, safe, and undeniably effective, the case for specialized models will continue to grow. It will be fascinating to see how the CX space reacts.

You can find out more about Diabolocom’s approach to specialized AI by checking out this article.

Want to go further? Discover Diabolocom’s Practical Playbook on Specialized AI: secure, sustainable, and purpose-built for contact center operations.