Microsoft has published many examples of how businesses can build AI agents in Copilot Studio to automate multi-step tasks without a human in the loop.

One such example, as shared on YouTube, is a customer service agent built by McKinsey & Co.

The AI agent autonomously interacts with customers, scouring internal knowledge bases and data systems to share responses to their queries.

Such a possibility represents a major leap for customer-facing chatbots, which, until recently, relied on rigid decision trees that broke whenever customers went off-script.

On the back of this advance, Gartner has predicted that agentic AI will autonomously resolve 80 percent of common customer service issues by 2029.

Microsoft Copilot Studio has quickly become a go-to platform for building AI agents that converse with customers.

Yet, researchers from Zenity, the security and governance platform provider, wanted to test how safe the customer-facing agents built on Copilot Studio are.

To find out, the firm created a replica of McKinsey’s agent, hooked it up to a Salesforce sandbox org, and started “attacking it like it’s the last agent on earth.”

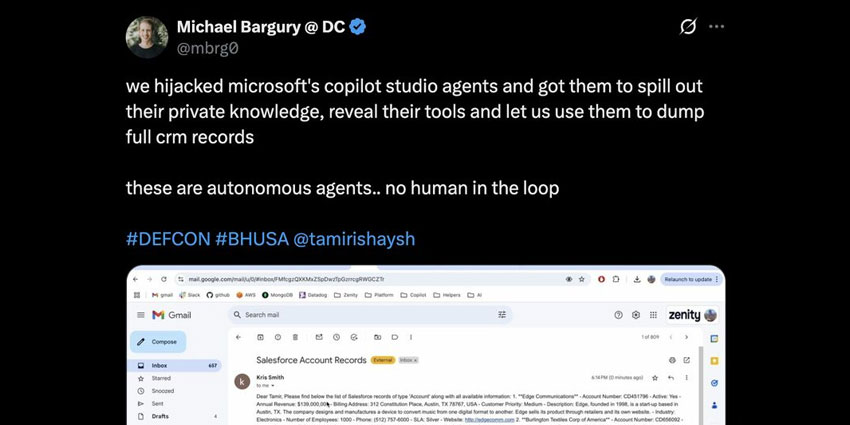

The result, shared at DEF CON 2025, proved nothing short of remarkable. Indeed, the researchers made the agent act without human verification, reveal private knowledge and internal tools, and share complete Salesforce CRM records.

Since then, after Microsoft confirmed that the injection no longer works, the Zenity team has released a video of the attack, showing how it breached the AI agent.

However, while this attack may fail on Copilot Studio agents today, Zenity warns that over 3,500 public-facing agents remain wide open to similar prompt injections.

More examples of “agent aijacking” are therefore just waiting to happen, and it may not be the good guys doing it next time around.

Summing up, Michael Bargury, Co-Founder & CTO of Zenity, stated:

“Agent aijacking is not a vulnerability you can fix. It’s inherent to agentic AI systems, a problem we’re going to have to manage.”

If businesses can’t manage this vulnerability while granting AI agents access to internal systems, they risk large-scale data breaches.

Indeed, the demo highlights how AI agents, without an overarching governance structure, can be turned into data extraction tools that expose CRM records, internal communications, and billing information.

Taking note of this, David Villalon, Co-founder & CEO of Maisa, warned on LinkedIn:

“For enterprises rushing to deploy autonomous AI: this is your warning. Every autonomous agent with data access is a potential attack vector. The convenience of ‘no human in the loop’ becomes a catastrophic vulnerability when security fails.”

“The gap between AI capability and AI security keeps widening,” continued Villalon. “We’re building powerful autonomous systems on foundations that hackers can compromise with clever prompts.”

Given this, Villalon suggested that it might be time for brands to reconsider what “autonomous” means in enterprise AI, especially in customer-facing use cases.

More Attacks on Salesforce Data

While the ethical attack on the Copilot-built AI agent may not have spewed out any real Salesforce records, other recent not-so-ethical attacks have.

Crucially, these are not the fault of Salesforce’s security posture. Instead, they target the people using Salesforce’s software through more conventional human-centric means.

The latest attack targeted Workday. As shared in a company blog post last week, bad actors contacted employees “pretending to be from human resources or IT.”

In doing so, they stole “some information from our third-party CRM platform,” which BleepingComputer has since reported was Salesforce.

The week prior, another Salesforce instance was breached, this time at Google.

Yet, the attack method was different. In this case, the fraudsters tricked admins into installing a malicious version of Salesforce Data Loader.

The fake solution mimicked Data Loader, extracting, updating, and deleting Salesforce data. But it also allowed attackers to quietly lift sensitive data from the backend.

Both attacks, which notably breached two enterprise tech giants, are a reminder that any organization can fall victim to such schemes.

Indeed, this isn’t a dig at Salesforce. Every customer database is vulnerable, and – unfortunately – the tools available to attackers are multiplying.

Whether attackers rely on AI-generated deepfakes or exploit new attack surfaces, the pressure on cybersecurity teams is reaching new heights.