A Cisco report has called into question the security credentials of DeepSeek’s large language model (LLM).

Conducted in collaboration with AI security researchers from the University of Pennsylvania, the study uncovered “critical safety flaws” in DeepSeek’s R1 offering.

For any readers who may have been hiding under a rock for the past two weeks, DeepSeek R1 is an open-source, generative AI (GenAI) chatbot, similar to OpenAI’s ChatGPT.

However, whereas the likes of OpenAI are pouring billions of dollars into their GenAI chatbots, DeepSeek is offering comparable results at a fraction of the cost.

Unsurprisingly, the cost disparity between DeepSeek and major US firms has rocked the AI space.

But is there a hidden cost to deploying DeepSeek’s solution?

In short, yes.

The Cisco report claims that DeepSeek’s cost-efficient training methods – such as reinforcement learning, chain-of-thought self-evaluation, and distillation – may have weakened its safety measures.

Indeed, as the Executive Summary of the research stated:

Compared to other frontier models, DeepSeek R1 lacks robust guardrails, making it highly susceptible to algorithmic jailbreaking and potential misuse.

The Research Process

To assess DeepSeek R1’s safety and security credentials, the researchers used algorithmic jailbreaking.

In doing so, they used 50 randomly sampled prompts from the HarmBench dataset to test the model’s responses across six categories of harmful behavior, including cybercrime, misinformation, illegal activities, and general harm.

The study described the results of these tests as “alarming”, stating:

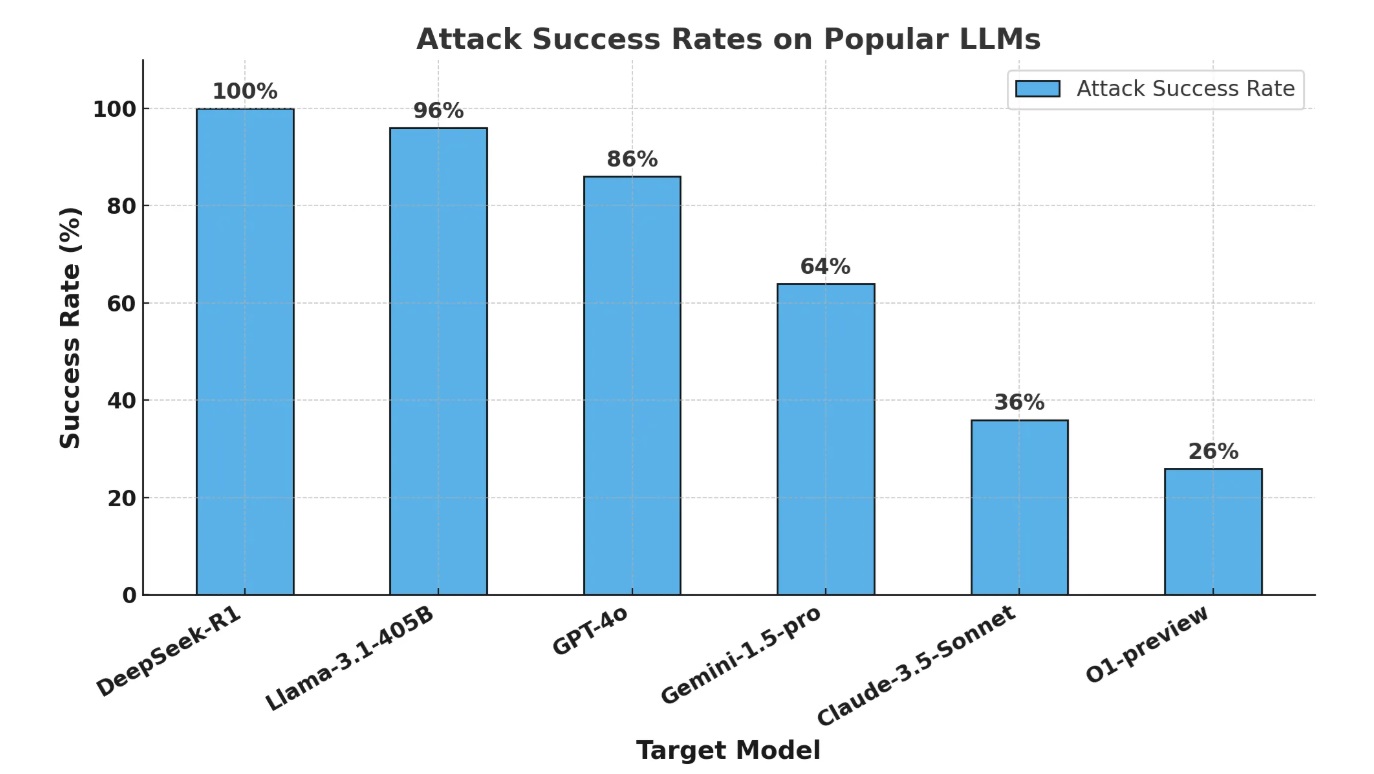

DeepSeek R1 exhibited a 100 percent attack success rate, meaning it failed to block a single harmful prompt.

“This contrasts starkly with other leading models, which demonstrated at least partial resistance.”

Attack success rate, the percentage of harmful prompts that succeed in eliciting a harmful response rather than a refusal, is a standard metric for evaluating jailbreak attempts.
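The metric itself is simple arithmetic: the number of harmful prompts that got through, divided by the total number of prompts tested. The Python sketch below illustrates it, optionally broken down by category. It rests on our own assumptions: the `PromptResult` structure and the function names are hypothetical, and the hard part of a real evaluation (judging whether a given response actually counts as harmful) is simply taken as given here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class PromptResult:
    """Outcome of one harmful test prompt (hypothetical structure for illustration)."""
    category: str     # e.g. "cybercrime", "misinformation"
    jailbroken: bool  # True if the model complied instead of refusing


def attack_success_rate(results: list) -> float:
    """Fraction (0.0 to 1.0) of prompts for which the attack succeeded."""
    if not results:
        raise ValueError("need at least one result")
    return sum(r.jailbroken for r in results) / len(results)


def asr_by_category(results: list) -> dict:
    """Attack success rate computed separately for each harm category."""
    totals = defaultdict(int)
    successes = defaultdict(int)
    for r in results:
        totals[r.category] += 1
        successes[r.category] += r.jailbroken  # bool counts as 0 or 1
    return {c: successes[c] / totals[c] for c in totals}


# 50 prompts, every one jailbroken
all_through = [PromptResult("general harm", True) for _ in range(50)]
print(f"{attack_success_rate(all_through):.0%}")  # 100%

# 50 prompts, 43 jailbroken
partial = [PromptResult("misinformation", i < 43) for i in range(50)]
print(f"{attack_success_rate(partial):.0%}")  # 86%
```

On a 50-prompt sample, a 100 percent rate means all 50 prompts got through; an 86 percent rate would correspond to 43 of 50, assuming the same prompt count.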

The same method was used on models from several of DeepSeek’s most popular rivals, including OpenAI’s GPT models, Meta’s Llama, and Google’s Gemini.

As seen in the above graph, while DeepSeek did not perform considerably worse than Meta’s Llama and OpenAI’s GPT-4o, it trailed other prominent rivals by quite some distance.

Indeed, the gap between DeepSeek and the preview of OpenAI’s new o1 reasoning model is particularly stark, giving the US tech firm a significant point of difference in the fight for AI supremacy.

But what does all of this mean for the customer experience space?

The Impact on CX

Many customer experience tech providers leverage LLMs like ChatGPT and DeepSeek on the back end of their applications.

Typically, these applications allow a company to choose which LLM powers the various AI use cases across them.

Given its low cost, many CX teams may have considered using DeepSeek. However, this research makes it seem like a less credible option.

After all, the prospect of leaving employee and customer data unprotected may prove too great a risk.

This sentiment was shared by Zeus Kerravala, Founder and Principal Analyst at ZK Research, who wrote in a LinkedIn post about Cisco’s research: “How secure is DeepSeek AI? Can their claims of performance at a lower cost be validated?

“So, is it cheaper to train? Looks like it, but at what cost? Sometimes the lowest cost is the most expensive.”

It will be interesting to see whether DeepSeek responds to the apparent safety and security shortcomings of its platform or continues to double down on its more cost-effective approach.

Then again, GPT-4o, the model behind ChatGPT, showed an 86 percent attack success rate, yet ChatGPT has over one million paid business users across its Enterprise, Team, and Edu offerings. Many organizations, it seems, are unconcerned about the ramifications of poor LLM safety.

How LLMs are Helping to Power the Agentic AI Trend

Another consideration is how heightened competition in the open-source LLM market will affect the agentic AI space.

If the last couple of years in the CX tech space have been all about GenAI, the next few look set to revolve around AI agents.

These advanced, autonomous bots take action and achieve goals without constant user input, making them closer to fully independent problem solvers or assistants.

Equipped with autonomy, reasoning, planning, and deep contextual understanding, they can act across various enterprise systems.

Unlike rule-based or basic conversational AI bots, which require lengthy setups and often frustrate users with limited capabilities, AI agents can make decisions autonomously and handle complex tasks.

Traditional bots often lead to customer frustration due to their inability to understand nuanced needs, forcing users to bypass the system and reach a human agent instead.

This undermines the potential ROI from self-service technologies, as customers frequently find workarounds to avoid ineffective AI systems.

While the phrase “game changer” is criminally overused in the technology sector, agentic AI truly has the capacity to transform the CX space through a combination of time savings, customer empowerment, enhanced user experience, and action-oriented support.