Global experts and tech industry leaders are increasingly uneasy about how fast AI adoption is moving and the growing list of risks that come with it, from sophisticated misuse to hard-to-solve engineering and governance problems.

The International AI Safety Report 2026 and Microsoft’s updated Secure Development Lifecycle (SDL) framework for AI, released this week, underscore a growing consensus that traditional risk-management frameworks need to evolve to keep up with technological change.

AI is showing up everywhere customers interact with companies, from search and support to payments, recommendations, and automated decision-making. But as adoption accelerates, many of the biggest risks also show up directly in customer experience.

How AI System Complexity Puts Customer Data at Risk

The International AI Safety Report 2026, a global scientific assessment written by more than 100 experts, found that AI systems are becoming more capable at high-level tasks while remaining unpredictable in everyday use. That inconsistency matters most at the customer interface, where errors, hallucinations, and misuse quickly translate into frustration, confusion, or loss of trust.

The report notes that performance remains “jagged,” with advanced systems excelling on complex benchmarks but still failing in simple or routine interactions. For customers, that gap can look like an assistant that sounds confident but gives the wrong answer, or an automated agent that handles edge cases poorly while moving fast on everything else.

In parallel with these global scientific assessments, tech industry leaders are also transforming internal practices to address AI’s security challenges.

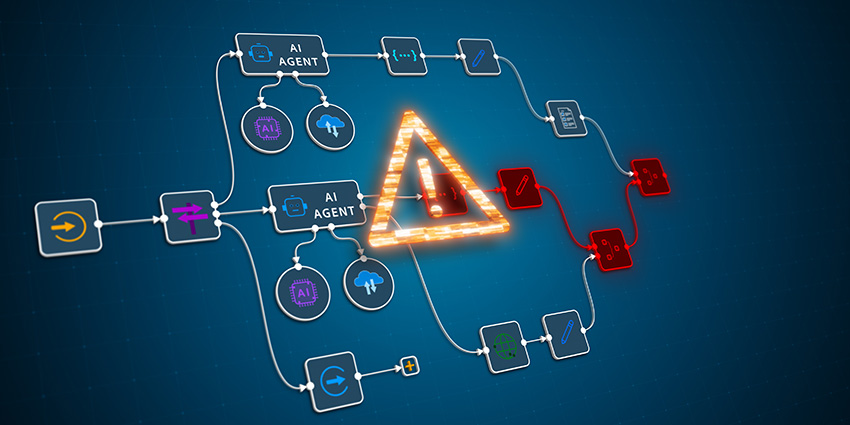

Customer experience risks are amplified by how deeply AI systems now blend data sources, tools, and memory.

Microsoft’s Deputy Chief Information Security Officer, Yonatan Zunger, explained in a blog post that AI security goes “far beyond traditional cybersecurity,” because these systems collapse trust boundaries, pulling together structured data, unstructured content, APIs, plugins, and agents into a single experience layer.

From a customer experience perspective, that integration powers personalization and responsiveness, but it also increases the chance that sensitive data leaks into places customers don’t expect.

AI systems accept inputs that traditional software never had to handle, including free-form prompts, retrieved content, and conversational context that persists over time. Zunger cautions that this can create customer-visible failures that are hard to explain after the fact:

“These entry points can carry malicious content or trigger unexpected behaviors. Vulnerabilities hide within probabilistic decision loops, dynamic memory states, and retrieval pathways, making outputs harder to predict and secure. Traditional threat models fail to account for AI-specific attack vectors such as prompt injection, data poisoning, and malicious tool interactions.”

Temporary memory and caching, designed to make AI feel more helpful, introduce another risk, as customers may not know what the system remembers, how long it remembers it, or where that information is reused.
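One way to make that retention visible is to attach an expiry time and a stated purpose to everything an assistant keeps, and to let the customer ask what is currently stored. The sketch below is illustrative only and is not drawn from either report: the `SessionMemory` class, its method names, and the 15-minute default are all hypothetical.

```python
import time
from dataclasses import dataclass, field


@dataclass
class MemoryItem:
    value: str
    purpose: str       # why the assistant kept this; shown to the customer
    expires_at: float  # absolute expiry time, in seconds


@dataclass
class SessionMemory:
    """Hypothetical short-lived memory with a customer-facing disclosure view."""
    ttl_seconds: float = 900  # assumed default: 15 minutes
    _items: dict = field(default_factory=dict)

    def remember(self, key, value, purpose, now=None):
        now = time.time() if now is None else now
        self._items[key] = MemoryItem(value, purpose, now + self.ttl_seconds)

    def _purge(self, now):
        # Drop anything whose retention window has passed.
        self._items = {k: v for k, v in self._items.items() if v.expires_at > now}

    def recall(self, key, now=None):
        now = time.time() if now is None else now
        self._purge(now)
        item = self._items.get(key)
        return item.value if item else None

    def disclose(self, now=None):
        # What is remembered, why, and for how much longer (values are omitted).
        now = time.time() if now is None else now
        self._purge(now)
        return [
            {"key": k, "purpose": v.purpose, "seconds_left": round(v.expires_at - now)}
            for k, v in self._items.items()
        ]

    def forget_all(self):
        self._items.clear()
```

A support assistant built this way could answer "what do you remember about me?" by returning `disclose()`, and could honor a "forget this" request with `forget_all()`.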

As the International AI Safety Report notes:

“Understanding these risks, including their mechanisms, severity, and likelihood, is essential for effective risk management and governance.”

Risks broadly fall into three categories: misuse, where AI systems are deliberately used to cause harm; malfunctions, where AI systems fail or behave in unexpected and harmful ways; and systemic risks that arise from widespread deployment across society and the economy.

Why Fast-Moving AI Automation Can Undermine CX

Speed is another recurring theme across both reports. AI systems evolve faster than traditional digital products, with frequent model updates, new tools, and changing agent behavior.

The International AI Safety Report warns that the rapid pace of AI deployment creates sociotechnical risks, particularly when the way people use these systems fails to keep pace with how quickly they are rolled out.

For customers, that gap often shows up as shifting behavior without warning: an assistant that suddenly responds differently, a workflow that changes overnight, or guardrails that appear or disappear. Even when updates improve capability, sudden changes can erode confidence, especially in high-stakes contexts like finance, healthcare, or customer support escalation.

Microsoft echoes this concern, noting that AI’s non-deterministic behavior challenges conventional expectations around consistency and predictability.

“Security policy falls short of addressing real-world cyberthreats when it is treated as a list of requirements to be mechanically checked off. AI systems, because of their non-determinism, are much more flexible than non-AI systems. That flexibility is part of their value proposition, but it also creates challenges when developing security requirements for AI systems.”

Enterprises can no longer rely on static scripts or fixed QA assumptions. Customers experience AI systems as dynamic interfaces, and they notice when those interfaces behave unexpectedly.
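In practice, moving beyond fixed QA assumptions can mean asking the same question many times and measuring how often the answers agree, rather than checking a single response against a script. The following is a minimal sketch of that idea, not a method described by Microsoft or the report. The function names are hypothetical, and `flaky_assistant` is a stand-in for a real model call.

```python
import random
from collections import Counter


def consistency_rate(ask, prompt, runs=20):
    """Call `ask` repeatedly; return (most common answer, share of runs giving it)."""
    # Normalize whitespace and case so trivially different answers count as equal.
    answers = Counter(ask(prompt).strip().lower() for _ in range(runs))
    top_answer, count = answers.most_common(1)[0]
    return top_answer, count / runs


_rng = random.Random(0)


def flaky_assistant(prompt):
    # Hypothetical stand-in for a non-deterministic model: usually right, sometimes not.
    return _rng.choice(["30 days", "30 days", "30 days", "14 days"])


if __name__ == "__main__":
    answer, rate = consistency_rate(flaky_assistant, "What is the refund window?")
    print(f"most common answer: {answer!r}, agreement: {rate:.0%}")
```

A team might run a check like this after every model or prompt update and flag any customer-facing question whose agreement rate falls below a threshold it has chosen for that use case.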

Trust Breaks First at the Front Door

As enterprises push AI further into customer-facing roles, trust is easiest to lose at the point of interaction. The International AI Safety Report highlights misuse risks such as fraud, manipulation, and deceptive content, which customers often encounter before organizations detect them internally.

Deepfakes, automated scams, and impersonation attacks not only harm victims but also damage brand credibility for any platform involved.

Microsoft’s updated Secure Development Lifecycle for AI reframes security as a way of working rather than a checklist, arguing that real protection depends on cross-functional collaboration that includes product design and user experience. Many AI risks originate in how customers are allowed to interact with a model, what assumptions the system makes about user intent, and how clearly boundaries are communicated.

The takeaway from both reports is that customer experience is no longer downstream from AI safety and security. Confusing outputs, inappropriate responses, and unexpected behavior can be signs of deeper issues with data integrity, access control, or system design.