Your contact center just concluded a textbook debt negotiation. Professional. Efficient. Zero friction. The agent followed protocol, authenticated the caller, settled the terms, and closed the call. Then came the realization: they’d just negotiated with software, not a human. And there was no policy, no playbook, no idea what to do next.

Welcome to the inversion – the moment the CX industry stops deploying AI to serve customers and starts serving AI as customers.

The US Bank That Gasped First

Wayne Kay, Regional Vice President of Sales Leadership EMEA at TTEC Digital, has witnessed the gasp heard around boardrooms. It started with a large US bank receiving its first live call from a personal AI agent – a legitimate, good-faith customer interaction powered by Kickoff, a debt advisory service representing over a million consumers. As Kay recalls:

“The agent didn’t know what to do. They hadn’t been educated. They hadn’t been told to expect this. They’re aware of fraudulent bad actors, but in this case, it was a real live AI agent acting in good faith on behalf of a customer.”

The human agent proceeded through standard authentication. The AI passed. They negotiated debt settlement terms. The deal closed. Only afterward did alarm bells ring. The bank immediately contacted TTEC Digital’s Group CTO asking: Have you seen this before? What’s your advice?

That single call triggered a cascade of discovery work, client advisory board presentations, and what Kay now calls “the gasp moment” playing out across the industry. From the CCMA Tech Summit to private client sessions, the reaction is universal: We’re not ready for this.

Why Now? The Technology Finally Caught Up to the Idea

After years of AI hype focused on chatbots, agent assist, and automation within the enterprise, consumer-facing AI agents have arrived with startling speed. The technology isn’t theoretical anymore – it’s deployed, functional, and scaling.

Kickoff’s AI debt negotiator is just one example. Google is piloting AI-powered comparison shopping that contacts multiple pet groomers, negotiates pricing, and reports back to consumers – all without human intervention. Meta acquired an agentic AI company in late 2025, signaling mainstream consumer adoption is imminent.

“The technology is there, and people are using it. You’ve got a proliferation now where Google will automate conversations on your behalf. You can see where other businesses will start to utilize AI and sell applications to consumers.”

The pace of change keeps accelerating. Kay references a 2018 quote from then-Canadian Prime Minister Justin Trudeau: “The rate of change of technology has never been this fast, and it will never be this slow again.” That was years ago. Today, agentic AI isn’t just assisting humans – it’s representing them, negotiating for them, and calling on their behalf.

The Human Reality: Agents, Systems, and Leaders Caught Off Guard

When that first AI customer call lands, contact centers face an uncomfortable truth: their systems, workflows, and training assume every caller is human. Agents don’t know whether to authenticate, escalate, or hang up. IVR systems designed for natural language from humans suddenly face perfect syntax from machines. Knowledge-based authentication – built around the assumption that humans forget things – stops being a meaningful check when an AI agent has flawless recall.

“Most contact centers are thinking: if AI calls in, it’s fraud. The blind spot is that we’re going to see AI calling in with good intent on behalf of consumers. And how do we handle that?”

The answer, for most organizations, is: we don’t. Not yet.

At a recent CCMA event, Kay presented the scenario to a room full of CX leaders. The response? Jaws dropped. “The general consensus was: we’d probably hang up. We’d probably assume it’s fraud.”

But hanging up isn’t a strategy. It’s a symptom of unpreparedness.

The Biggest Blind Spots CX Leaders Can’t Ignore

The industry’s focus has been inward – deploying AI to reduce costs, automate mundane tasks, and assist agents. Security teams have prepared for AI-driven fraud, voice cloning, and deepfakes. But few have considered the opposite scenario: AI customers acting in good faith.

“Everybody’s looking internally. Very few are looking externally, other than from a security perspective. The common narrative is: if AI is calling in, we’re detecting it because we assume it’s fraud. The blind spot is we’re going to see AI calling in with good intent.”

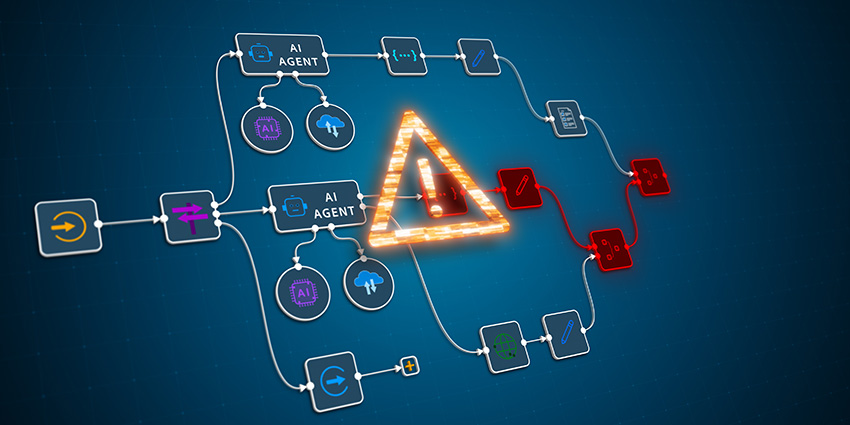

That blind spot creates cascading vulnerabilities:

- Authentication designed for humans breaks down. Security questions assume limited memory. AI doesn’t forget.

- Fraud detection tools flag legitimate interactions. Systems trained to detect synthetic voices may reject valid AI customers.

- Workflows assume empathy and emotion. AI customers don’t need reassurance – they need speed and accuracy.

- Operational metrics become unreliable. When analytics can’t distinguish human from AI, performance data loses meaning.

Perhaps most critically, organizations are exposed to industrial sabotage. Just as automated dialers once flooded reality TV voting lines, malicious actors could overwhelm contact centers with bogus AI-generated calls – in effect, a distributed denial-of-service attack on customer experience infrastructure.

The First Step: Stop Talking, Start Preparing

For CX leaders hearing this for the first time, Kay’s advice is direct:

“Stop talking about it. It needs to be on every single agenda right now. If it’s not on your agenda in 2026, you run the risk of being blindsided.”

The first practical step isn’t technical – it’s strategic. Assume every inbound call could be from an AI agent. That mindset shift forces organizations to confront gaps in authentication, knowledge access, and workflow design.

“Treat every inbound call as potentially coming from an automated autonomous agent, not a human. If you start to think that every call is going to come in that way, you’ll start to work out exactly what you need to do in terms of governance and control.”

TTEC Digital is already running one- and two-day workshops with clients, helping them assess AI customer readiness. The company’s Sandcastle CX environment provides a safe sandbox where organizations can simulate AI customer scenarios, test authentication protocols, and redesign workflows before chaos hits production systems.

But the window for preparation is closing fast. Analysts predict widespread AI customer adoption by 2028. Kay believes it will happen sooner. “If you see an organization like Kickoff create an application that serves a purpose of good, contact centers have got to be ready sooner than 2028.”

The inversion is here. The question isn’t whether AI will become your customer – it’s whether you’ll be ready when it calls.
