I arrived in Las Vegas expecting the usual conference promises about artificial intelligence. What I found instead were practitioners talking about how they address the gap between what AI can do and what contact centers need.

AWS re:Invent is vast—over 60,000 people scattered across multiple venues. But in the Amazon Connect sessions, away from the main stage theatrics, something more interesting was happening. People were being rather honest about what’s actually blocking agentic AI deployment.

It wasn’t capability. It was trust. And trust, it turns out, requires visibility.

The Fear Problem Is Real (And Observability Might Solve It)

The most striking conversation I had was about flight simulators.

Mike Wallace, Americas Solutions Architecture Leader for Amazon Connect, described observability as “a flight simulator for agentic AI.” Pilots train in simulators because the consequences of learning in real aircraft are unacceptable. Contact center leaders face a similar problem: how do you learn to trust AI agents when every interaction affects real customers?

“Take that same care of your Agentic AI… apply the same logic that you put against your human agents into your agent AI, and you’ll be successful.”

— Mike Wallace, Amazon Connect

The answer is that you need to see everything. Not summaries. Not aggregate success rates. Actual visibility into what the AI agent said, why it said it, and where the handoff to a human happened.

Wallace outlined Amazon Connect’s approach: a “Flight Recorder” for reasoning that shows the chain of thought, pre-flight stress testing to run thousands of simulations, and real-time detection of customer frustration with instant handoff to supervisors.

“You can’t just put your API at a Large Language Model and hope for the best.”

Leaders know the technology works in demos. What they don’t know is whether they’ll spot when it stops working in production, before customers do. That’s where Amazon Connect committed last week to setting itself apart.

Read more: The Flight Simulator for Agentic AI →

You Don’t Have to Choose Between Human and Agentic

It is rather odd that the conversation around AI in contact centers has become so binary. Robots or humans. It feels like a zero-sum game.

But Pasquale DeMaio, Vice President of Amazon Connect, describes the relationship as a “gentle continuum.” During his keynote, he outlined a vision in which businesses can be 100% agentic, 100% human, or, more likely, somewhere messy and effective in the middle.

“We believe that AI and people will get a lot better together. You want that true concierge experience that brings all the context up front… all the way to the agent to make them superhuman.”

— Pasquale DeMaio, Amazon Connect

What’s actually happening is a redistribution of work. AI handles password resets and order status. Humans get the complex, emotional, high-value conversations. DeMaio’s advice is pragmatic: “Pick a workload. Move fast, learn, iterate.”

The proof came from Centrica’s James Boswell. By using AI to analyze transcripts, Centrica reduced talk time by 30 seconds. Rather than banking the savings, the team used the extra time to cross-sell. Centrica also saw a 28% reduction in complaints and an 89% increase in NPS.

When you remove the cognitive load from agents, they become much better at actually talking to people.

Read more: You Don’t Have to Choose Between Human and Agentic →

The Revenue Surprise Nobody Expected

Here’s something I didn’t anticipate: the first measurable benefit of agentic AI isn’t efficiency. It’s revenue.

Ayesha Borker and Jack Hutton from Amazon Connect explained that when AI agents handle routine inquiries, human agents have more time per interaction. More time means better discovery of customer needs. Better discovery means more sales.

Amazon has been rehearsing for this since 1998, when it launched its recommendation engine. Now it is lifting that entire infrastructure (“Frequently Paired,” “Trending Now,” “Recommended for You”) and dropping it into Amazon Connect.

Organizations implementing this are seeing a 10-15% lift in revenue. GoStudent saw a 20% increase in engagement.

This matters for budget conversations. Cost reduction is a hard sell—it implies job losses. Revenue generation is easier to approve. If agentic AI pays for itself through increased sales rather than reduced headcount, the business case changes entirely.

Read more: Turning the “Sorry about that” Department into the “You might like this” Department →

The Robot Pause Is a UX Problem

There’s a moment in most AI voice interactions where you can tell you’re talking to a machine. It’s the pause. The slightly-too-long gap between when you finish speaking and when the AI responds.

Amazon announced Nova Sonic at re:Invent: sub-200-millisecond latency, natural interruption handling, emotional tone recognition. Keith Ramsdell explained:

“With Nova Sonic, you’re not just able to understand what the customer is saying, but how they say it.”

— Keith Ramsdell, Amazon Connect

Two hundred milliseconds is faster than human reaction time. AI voice agents won’t feel slower than humans anymore. They might feel more responsive.

This is a UX problem disguised as a technology problem. Customers don’t distrust AI because it’s artificial; they distrust it because it feels awkward. Nova Sonic tackles that awkwardness by processing audio directly, rather than transcribing it to text first, and so keeps the emotional cues that usually get lost in translation.

Read more: Amazon Nova Sonic: The End of the “Robot Pause” in CX? →

What Wall Street Sees

There was a curious undercurrent nobody mentioned in sessions but everyone discussed in hallways: legacy vendor stability.

Zeus Kerravala from ZK Research was looking at balance sheets while everyone else looked at features.

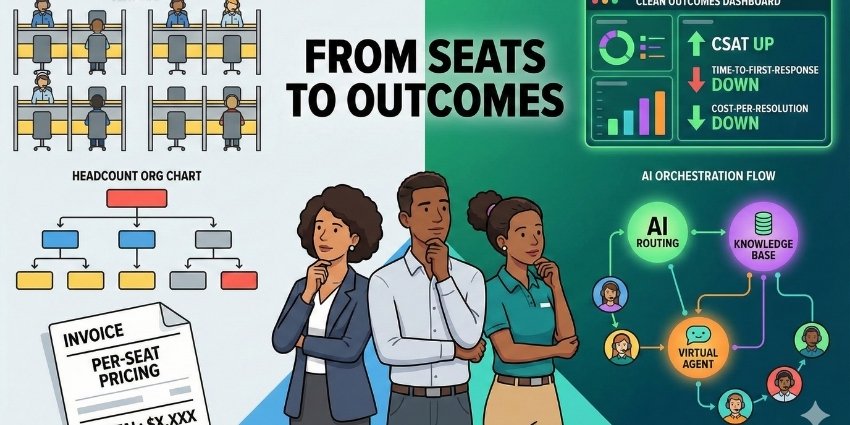

“The investor community doesn’t like the space. They hate it because they know this disruption is coming… What does that do to an industry that’s heavily reliant on selling $100 agent seats? I think it’s maybe the biggest disruption we’ve seen.”

— Zeus Kerravala, ZK Research

AI agents aren’t humans. They’re code. Charging per-seat prices, as if each agent were a salaried employee, for what is essentially a script seems odd. But legacy vendors are stuck: they have to change to survive, yet their investors hate change. Consumption-based pricing makes revenue spiky, and that uncertainty terrifies Wall Street.

“I’m not evaluating features anymore. I’m evaluating which vendors will still exist in three years.”

Read more: Why Wall Street is Suddenly Nervous About Your Contact Center Vendor →

What I’m Taking Home

AWS re:Invent 2025 felt like the week agentic AI stopped being theoretical for contact centers. Not because the technology improved—it’s been capable for a while—but because the supporting infrastructure finally caught up.

Observability gives leaders confidence to deploy. Nova Sonic removes UX awkwardness. The human-agentic hybrid provides a narrative that doesn’t require job elimination. Revenue opportunities give finance teams a reason to approve budgets.

But there’s a deeper pattern here that explains why all these pieces came together at once.

“As CCaaS evolves toward full customer-experience orchestration, the advantage increasingly lies in the underlying infrastructure and how easily platforms can connect to the wider ecosystem to support seamless end-to-end workflows. Amazon Connect stands out because it runs on AWS’s infrastructure, delivering unique benefits in efficiency, scalability, and pricing, while advancing in lockstep with AWS’s continuous innovation.”

— Oru Mohiuddin, Research Director, Enterprise Communications and Collaboration, IDC

That’s precisely what I witnessed throughout the week. Amazon Connect isn’t competing on features alone anymore—it’s competing on the infrastructure layer that makes everything else possible.

The conversations reflected this maturity. Less speculative. More grounded in actual deployments and measurable outcomes. People were comparing implementation strategies instead of debating whether to implement at all.

That shift—from “should we?” to “how do we?”—might be the most significant thing that happened at AWS re:Invent 2025.

Visit our complete AWS re:Invent 2025 Event Hub for all coverage and exclusive interviews.