It is February 2026. The year of experimentation is over, and the year of enforcement has begun.

For the last two years, Customer Experience (CX) leaders have been told to get ready for the EU AI Act. We attended webinars, downloaded whitepapers, and nodded along to legal experts. It all felt theoretical, or like a problem for the Legal team to solve “later.”

‘Later’ has arrived.

With the August 2026 deadline for high-risk AI systems looming, the conversation has shifted violently. We have moved from legal theory to operational triage. The question is no longer ‘What does the law say?’ It is ‘Which of our AI pilots are we killing because we can’t afford the compliance bill?’

If you think this doesn’t apply to you because you’re based in the US or Asia, you are mistaken. The Brussels Effect has taken hold. The operational reality of CX is about to change forever.

The Great Abandonment: Why Pilots Are Dying

The most immediate impact of the Act isn’t a fine; it’s a freeze.

In late 2025, Gartner issued a stark warning. They predicted that organizations will abandon a large share of AI projects through 2026.

The reason is simple. These projects aren’t supported by ‘AI-ready data’ or adequate governance.

For CX leaders, this is the ‘Abandonment’ phase. Many of the pilots launched in the hype cycle of 2024 are now facing a hard audit. These include biometric sentiment analysis and unchecked generative summaries.

When you calculate the cost of strict data governance, the ROI on these ‘cool’ projects often collapses. As Gartner’s Top Strategic Technology Trends for 2026 puts it:

‘The cost of compliance for high-risk AI is forcing companies to kill ROI-negative pilots.’

This isn’t just about cutting costs. It is about survival.

Forrester calls this the ‘Trust Reckoning.’ In their 2026 predictions, they note that buyers are now demanding ‘proof over promises.’

The days of buying a ‘black box’ AI solution from a startup are gone. If you cannot show the lineage of your data, you simply won’t close the deal.

Forrester predicts that 30% of large enterprises will mandate “AI fluency” training. This is the only way to mitigate these compliance risks.

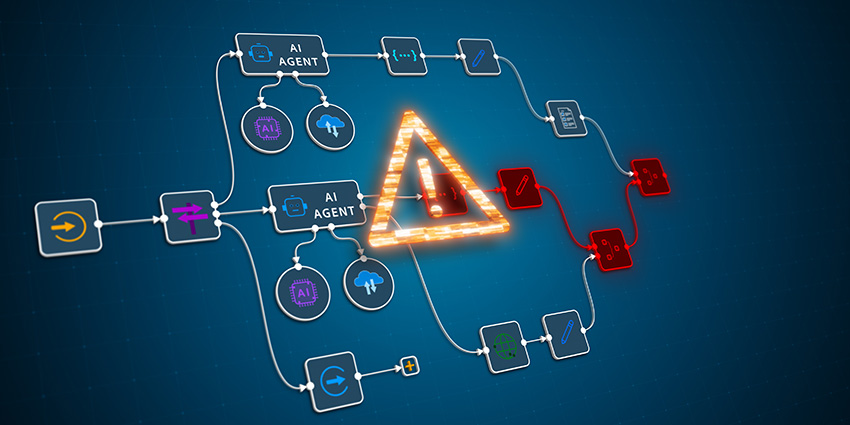

The ‘Agentic’ Shift and the Transparency Trap

The stakes have risen because the technology has changed. We aren’t just using ‘predictive text’ anymore. We are deploying autonomous agents.

Under the EU AI Act, transparency is non-negotiable. If a machine is talking to a human, the human must know.

This sounds simple until you apply it to the complex voice bots currently flooding the market.

Voice AI vendor Retell AI identified ‘Compliance’ as a top trend for 2026. They specifically noted that voice bots must announce they are AI immediately.

This kills the ‘Turing test’ marketing strategy. Deception is now a liability.
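As a rough illustration of what "announce immediately" could mean in practice, here is a minimal Python sketch that forces a disclosure line to be the first thing a bot says. The wording of `AI_DISCLOSURE` and the `with_disclosure` helper are hypothetical; neither comes from the Act, Retell AI, or any vendor SDK, and the actual disclosure text should come from your legal team.

```python
# Hypothetical disclosure text; not legal wording from the EU AI Act.
AI_DISCLOSURE = "You are speaking with an AI assistant."


def with_disclosure(turns: list[str], disclosure: str = AI_DISCLOSURE) -> list[str]:
    """Return the bot's scripted turns with the disclosure as the first utterance.

    If the first turn already opens with the disclosure, the script is
    returned unchanged so the caller never hears it twice.
    """
    if turns and turns[0].strip().lower().startswith(disclosure.lower()):
        return list(turns)
    return [disclosure, *turns]


if __name__ == "__main__":
    script = with_disclosure(["Hi, thanks for calling. How can I help?"])
    for line in script:
        print(line)
```

The point of putting this in code rather than in a style guide is that the disclosure cannot be skipped by a prompt change or a new script author.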

Major players are already moving to protect themselves. Following the August 2025 deadline for General Purpose AI (GPAI) models, Salesforce released strict guidance.

They explicitly addressed the new transparency obligations for their Agentforce autonomous agents. Salesforce isn’t just asking users to trust it; it is publishing deep compliance guides to prove its agents meet ‘high transparency’ tiers.

Christian Kleinerman, EVP Product at Snowflake, argues that this shift forces companies to stop treating AI as a mystery box.

“With the regulatory landscape taking shape, businesses must place a greater focus on transparency, traceability, and auditability. For many organizations, the biggest challenge isn’t the models themselves, but understanding and governing how AI systems interact with sensitive data and business processes.”

Governance as Infrastructure

So, how do you survive the reckoning? You stop treating compliance as a spreadsheet exercise.

OneTrust’s 2026 manifesto argues that governance must become infrastructure.

Thousands of AI agents make millions of micro-decisions daily in a contact center. Manual compliance is impossible. You cannot check the work of an autonomous fleet with a clipboard.

We are seeing a flight to safety in the vendor landscape. Genesys recently achieved ISO/IEC 42001:2023 certification.

This move effectively productizes compliance. By building a certified management layer, they offer a shield to their customers.

This aligns with what Adam Spearing, VP of AI GTM at ServiceNow EMEA, calls “governed acceleration.”

“Reactive AI governance is a hindrance; proactive AI governance is an accelerator to business value. The next challenge is what I call ‘governed acceleration’ – operationalizing these rules by embedding governance directly into everyday workflows. Clear rules don’t slow innovation, but rather prevent the technical debt that comes from bolting on governance after the fact.”

The message is clear. If your governance isn’t automated and embedded in the platform, it doesn’t exist.
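To make "automated and embedded" concrete, here is a minimal Python sketch of a policy gate wrapped around agent actions. Every call is written to an audit trail, and anything outside an approved list is blocked before it runs. The action names, the `ALLOWED_ACTIONS` policy, and the in-memory `AUDIT_LOG` are all hypothetical stand-ins, not any vendor's API; a real deployment would write to an append-only store.

```python
import json
import time
from functools import wraps

AUDIT_LOG: list[str] = []  # stand-in for an append-only audit store
ALLOWED_ACTIONS = {"summarize_ticket", "suggest_reply"}  # hypothetical policy


class PolicyViolation(Exception):
    """Raised when an agent attempts an action that is not approved."""


def governed(action: str):
    """Decorator that records every attempt and blocks unapproved actions."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            allowed = action in ALLOWED_ACTIONS
            # Log before acting, so blocked attempts are traceable too.
            AUDIT_LOG.append(
                json.dumps({"ts": time.time(), "action": action, "allowed": allowed})
            )
            if not allowed:
                raise PolicyViolation(f"action {action!r} is not approved")
            return fn(*args, **kwargs)
        return wrapper
    return decorator


@governed("summarize_ticket")
def summarize_ticket(text: str) -> str:
    return text[:80]  # placeholder for a real model call


@governed("issue_refund")
def issue_refund(amount: float) -> str:
    return f"refunded {amount}"
```

Calling `summarize_ticket(...)` succeeds and leaves one audit record. Calling `issue_refund(...)` raises `PolicyViolation` and still leaves a record, which is the difference between governance as infrastructure and governance as a spreadsheet.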

The Legal Minefield: Shadow AI and the Extension Mirage

A dangerous rumor is circulating in legal circles. The deadline might be extended.

It is true that late in 2025, the European Commission proposed a Digital Omnibus on AI. Cooley reported that this proposal suggested extending the deadline for certain high-risk systems to December 2027.

Do not bet your company on this.

As legal experts point out, this is a proposal, not a law. Relying on a potential delay creates a dual-speed planning nightmare. If the extension fails, you will hit the August 2026 wall at full speed.

Furthermore, the threat isn’t just from the EU regulators. It is from your own employees.

Baker Donelson warns that 2026 will be the year of enforcement over experimentation. They highlight a specific focus on Shadow AI.

In a CX context, Shadow AI is the agent who copies a customer email into a free, unapproved LLM. They just want a quick summary.

However, that data has now left your secure perimeter. Under the new laws, that is a traceable, fineable breach.

Ian Jeffs, UK&I Country General Manager at Lenovo, highlights just how unprepared most organizations are for this reality.

“Our latest CIO research shows Europe is at an AI inflection point: while 57% of organizations are already approaching or in late-stage AI adoption, only 27% have a comprehensive AI governance framework in place.”

“The AI Act helps close that gap by reinforcing trust, accountability and transparency. This moment should be seen not just as a compliance deadline, but as an opportunity to embed responsible AI practices.”

The Global Standard: There Is No Hiding Place

Finally, we must address the ‘Not in Europe’ fallacy.

‘We are a US company, so we’ll wait for US regulation.’ This is a failed strategy.

First, reports of a draft US Executive Order suggest the US is moving to align with EU principles. This is primarily to protect trade.

Second, South Korea finalized its AI Framework Act in January 2026. IAPP notes that this mirrors the EU’s risk-based approach.

The Brussels Effect has occurred. The EU AI Act is becoming the template for the world.

If you build a compliance strategy that meets the EU bar, you are likely safe globally. If you try to maintain different standards for different regions, you are creating an operational nightmare.

The Final Sprint

We have less than six months until the August 2026 deadline.

The time for “learning” is over. Now is the time for auditing.

Look at your CX stack. Identify the zombie pilots that are consuming data without governance. Kill them.

Look at your vendors. Demand proof of their certifications. If they can’t show you their ISO 42001 status, find a new vendor.

And look at your teams. Move them from Shadow AI users to Governed AI practitioners.
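The stack and vendor checks above can be sketched as a simple triage pass over a pilot inventory. This is a minimal Python sketch under stated assumptions: the `Pilot` fields and the sample entries are hypothetical, and the two yes/no flags stand in for whatever evidence your audit actually collects.

```python
from dataclasses import dataclass


@dataclass
class Pilot:
    name: str
    vendor: str
    has_data_lineage: bool   # can we show where the data came from?
    vendor_iso_42001: bool   # has the vendor shown ISO/IEC 42001 certification?


def triage(pilots: list[Pilot]) -> tuple[list[str], list[str]]:
    """Split pilots into names to keep and names to flag for review."""
    keep, flag = [], []
    for p in pilots:
        if p.has_data_lineage and p.vendor_iso_42001:
            keep.append(p.name)
        else:
            flag.append(p.name)
    return keep, flag


if __name__ == "__main__":
    # Hypothetical inventory, for illustration only.
    inventory = [
        Pilot("call-summaries", "VendorA", True, True),
        Pilot("sentiment-scoring", "VendorB", False, True),
        Pilot("auto-replies", "VendorC", True, False),
    ]
    keep, flag = triage(inventory)
    print("keep:", keep)
    print("flag:", flag)
```

A flagged pilot is not automatically dead. It is one where someone now owes the business either the missing evidence or a shutdown date.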

The EU AI Act isn’t coming to kill innovation. It is coming to kill lazy innovation.

As we stare down the barrel of August 2026, I am reminded that constraint breeds creativity. The companies that survive this transition won’t just be compliant. They will be the only ones trusted enough to do business.
